Creative Control and Deep Learning Generative Neural Networks

Generative models are used to produce a variety of artistic outputs, including paintings, music, and even poetry. They have also been used to create new styles and genres of art by blending styles and techniques from existing artworks.

Generative models are not a replacement for human creativity and skill, but a tool that can assist and inspire creatives to explore new possibilities and push the boundaries of traditional art forms.

What is a Generative Model and What Does It Do?

In the context of art generation with artificial intelligence and machine learning, a generative model is a type of algorithm, built on deep learning artificial neural networks, that can create new and original pieces of artwork, music, or other creative outputs. These models are trained on large datasets of existing works. They learn to recognize the patterns and common features that are prominent in the training data, and they use statistical and probabilistic techniques to generate new outputs that resemble the training data and conform to the parameters that have been set.
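To make the train-then-sample idea concrete, here is a deliberately tiny sketch in Python. It is not a deep learning model: a single Gaussian distribution stands in for the neural network. "Training" estimates its mean and standard deviation from the data, and "generation" samples new values that resemble the training data. The training values and the helper names are hypothetical.

```python
import random
import statistics


def fit_gaussian(data):
    """'Training': estimate the mean and standard deviation of the data."""
    return statistics.mean(data), statistics.stdev(data)


def generate(params, n, seed=None):
    """'Generation': draw n new values from the fitted distribution."""
    mu, sigma = params
    rng = random.Random(seed)  # seeded generator so results can be reproduced
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical training data, e.g. average brightness of existing artworks
training_data = [0.62, 0.58, 0.71, 0.66, 0.60, 0.69, 0.64]
params = fit_gaussian(training_data)
new_values = generate(params, 5, seed=42)
```

A real generative network learns a far richer distribution over whole images, but the two phases (fit to existing data, then sample something new from what was learned) are the same.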

What is Generative Art?

Generative art is a form of art created through the use of algorithms and computer programs, now often using artificial intelligence and machine learning techniques (earlier generative art was also created with mechanical devices). While generative art offers artists new and exciting ways to create artwork, it also presents unique challenges in terms of creative control and artistic expression.

When art is generated by algorithms and computer programs without an artistic vision and control, it can be difficult to identify the creative voice of its author. Developing a personal style in this genre is a demanding but interesting task, though the challenge is not unique to this medium.

Artistic Challenges and Creative Control

One of the challenges of working with generative art is the level of control an artist has over the output. Generative algorithms often produce outputs that are unpredictable and difficult to control, so the artist must be willing to relinquish some control over the final product. However, this unpredictability and a certain degree of randomness can also be seen as an opportunity for artists to experiment and discover new forms of artistic expression.

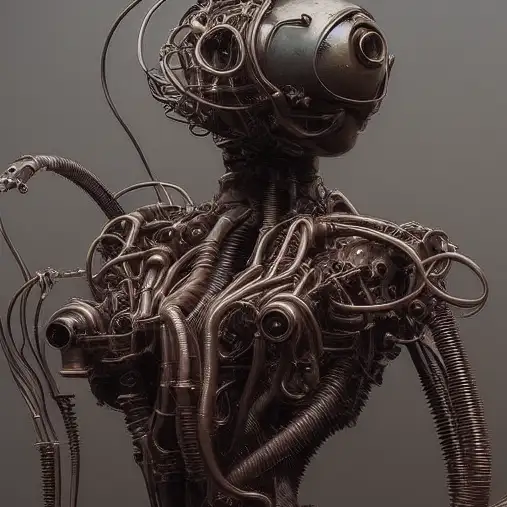

Artificial intelligence generators are particularly useful for blocking in intricate structures of textures and forms, and for creating multiple variant studies.

It is important to strike a balance between the limitations of the technology and the creative vision of the artist to produce meaningful and engaging artwork.

Controversy Around AI-Generated Art

It is the responsibility of the artist to be aware of the controversies when using the technology. In many cases, common sense will apply.

However, when you encounter opinions that AI-generated art is soulless or not an art form at all (and similar eruptions from media experts), enjoy a good laugh and just keep working.

Types of Creative Control

When painting, you need to control three basic things: subject, composition, and the rendering of visual style (color tones, the technique used, etc.). You will use analogous processes when rendering visual output with a generative model.

When generating an image, you may use various strategies to control or fine-tune the result: Repeating and Iteration (fine-tuning the parameters, using different models); Layering (using image-to-image as a starting point and changing prompts on each iteration layer); Manipulation of Output (using a tool like chaiNNer to adjust the output and feed it to the next iteration); Image Prompting (adjusting with another conditional prompt, pix2pix GAN); LoRA Fine-tuning and Offset Noise (generating based on blocking of the canvas and prompting); Inpainting and Outpainting (now common options for scaling compositions); and Selective Upscaling (adding details to a masked part of the upscaled canvas). Of course, the most straightforward approach is to use postproduction and retouching. The title illustration of this article was created by combining these methods.
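The compositing step behind selective upscaling can be sketched as a simple mask blend. This is a minimal NumPy sketch, assuming you already have the upscaled canvas and a more detailed version of it at the same resolution; `selective_blend` is a hypothetical helper, not a function from AUTOMATIC1111 or chaiNNer. Where the mask is 1 the detailed pixels are kept, where it is 0 the base canvas is kept, and values in between mix the two linearly.

```python
import numpy as np


def selective_blend(base, detail, mask):
    """Composite `detail` over `base` only where `mask` is set.

    base, detail: float arrays of shape (H, W, 3) with values in [0, 1]
    mask: float array of shape (H, W) with values in [0, 1];
          1 keeps the detailed pixel, 0 keeps the base pixel
    """
    if base.shape != detail.shape:
        raise ValueError("base and detail must have the same shape")
    m = mask[..., np.newaxis]  # add a channel axis so the mask applies to R, G and B
    return m * detail + (1.0 - m) * base
```

A soft-edged mask (for example, a blurred selection) avoids visible seams where the detailed region meets the rest of the canvas.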

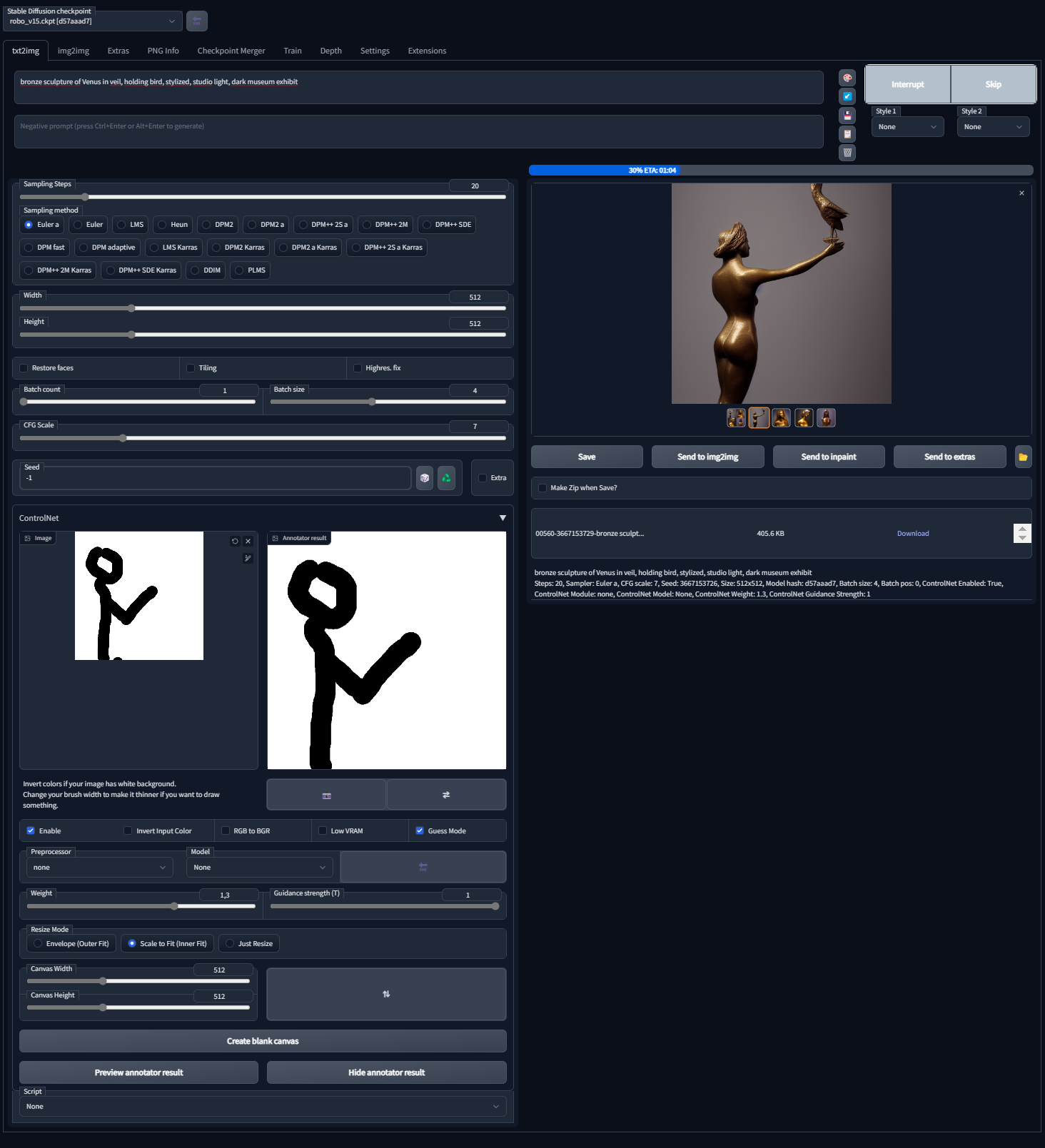

In the following example, using the Stable Diffusion model and extensions for AUTOMATIC1111, I will demonstrate one interesting option for controlling composition when generating an image.

Brief Tutorial on Composition Control Using ControlNet Extension

In this example, we will experiment with the ControlNet extension for Stable Diffusion (at the moment, it works with SD 1.x model variants). It allows us to use additional conditions and parameters and to generate images that conform to an outline sketch or scribble (and more).

Step 1. Install AUTOMATIC1111

A tutorial on how to run it on a local machine is in this article.

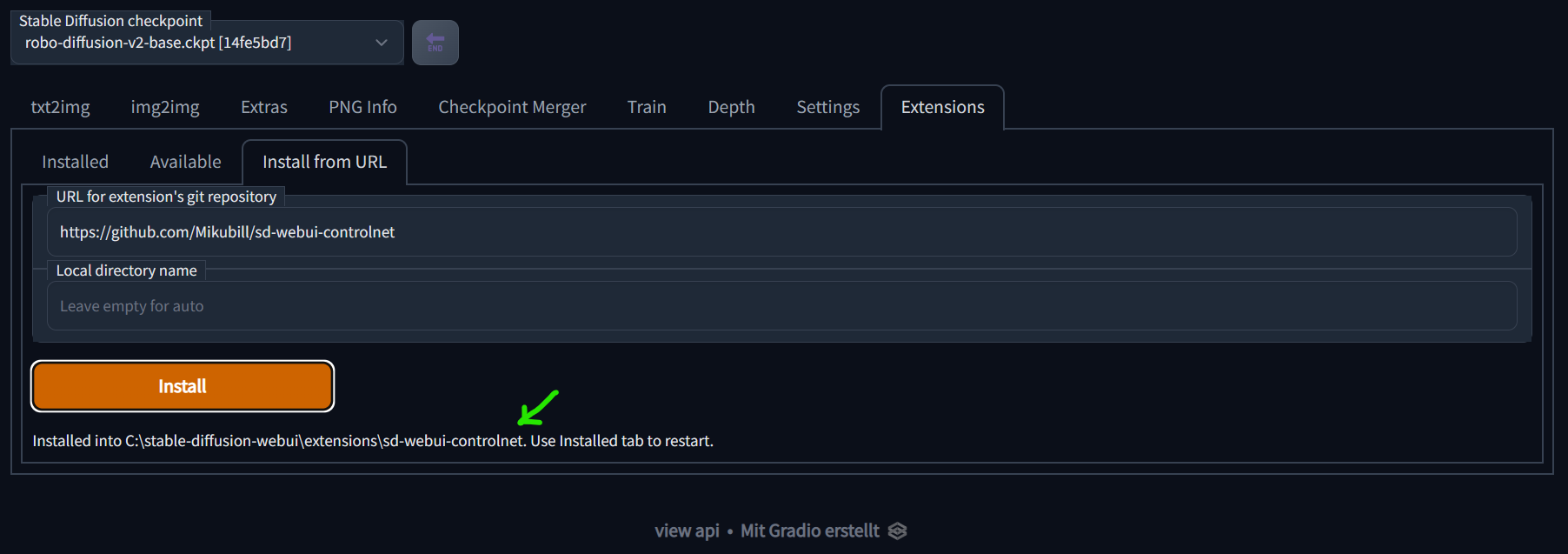

Step 2. Install Extension

In the AUTOMATIC1111 web UI, open the Extensions tab and its Install from URL subtab. To install ControlNet (a neural network structure for controlling diffusion models), paste https://github.com/Mikubill/sd-webui-controlnet into the URL field and press the Install button. After a short while, the extension will be installed into this subdirectory (the path will be under your own Stable Diffusion web UI installation):

Step 3. Download models

Download all .safetensors models from https://huggingface.co/webui/ControlNet-modules-safetensors/tree/main.

Put them into the /models subfolder of the ControlNet extension folder (the installation path from the previous step). Then, in the Installed tab, choose Apply and restart UI to restart the AUTOMATIC1111 web UI.

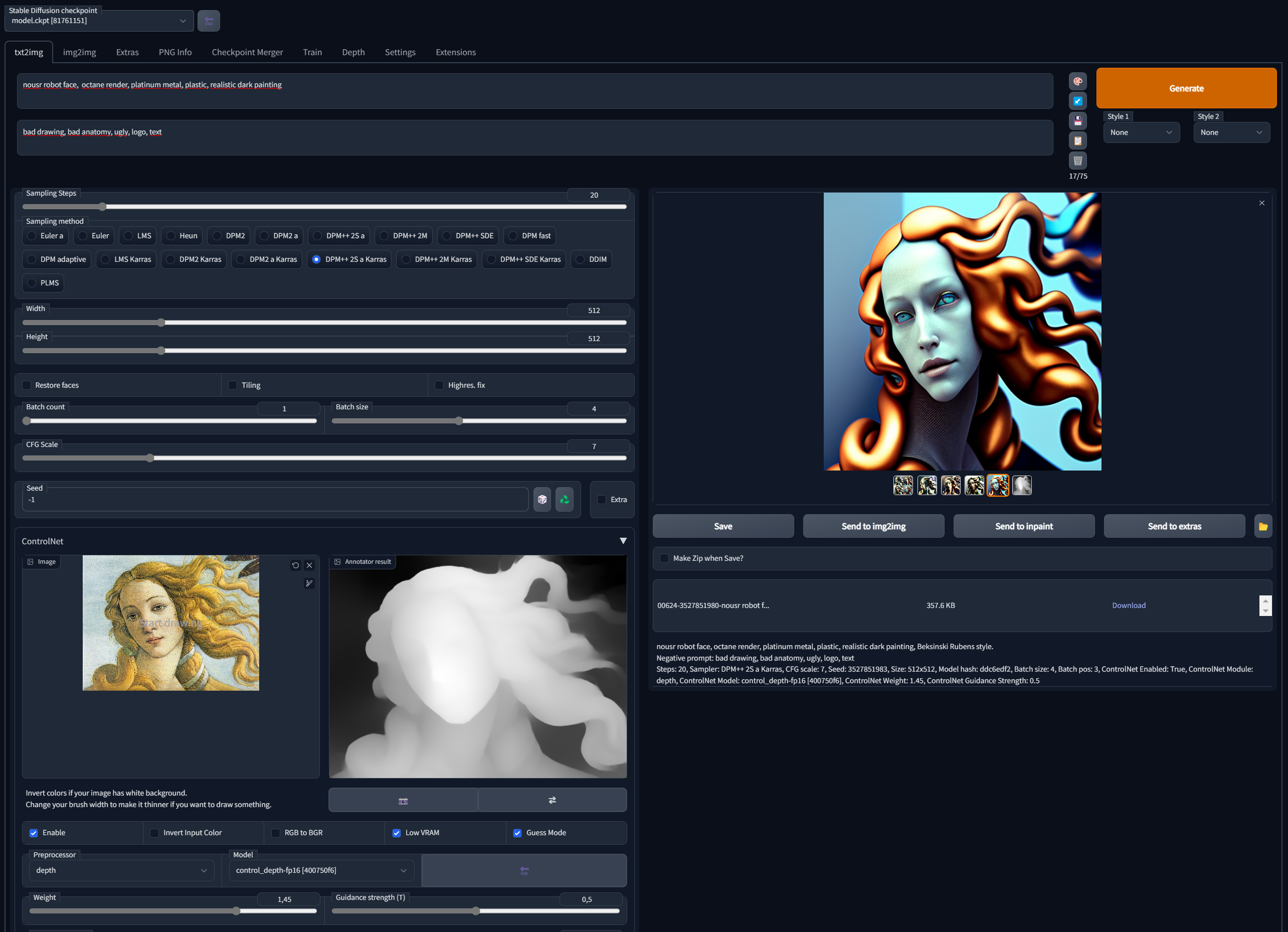

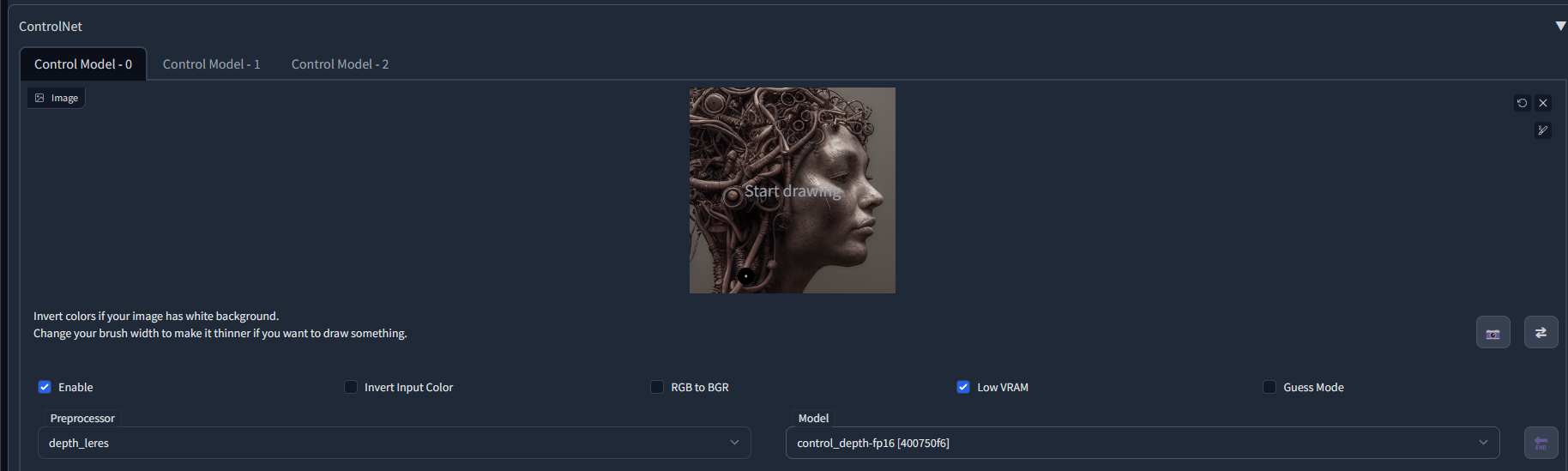
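If you prefer a script to downloading each file by hand, the download can be sketched with the `huggingface_hub` package (install it with `pip install huggingface_hub`). This is a sketch under assumptions: `extension_dir` is the ControlNet extension folder from Step 2, which you pass in yourself, and `models_dir` and `download_controlnet_models` are hypothetical helper names. `snapshot_download` with `allow_patterns` fetches only the `.safetensors` files from the repository linked above.

```python
from pathlib import Path

REPO_ID = "webui/ControlNet-modules-safetensors"  # repository linked in Step 3


def models_dir(extension_dir):
    """Return the /models subfolder of the ControlNet extension folder."""
    return Path(extension_dir) / "models"


def download_controlnet_models(extension_dir):
    """Download every .safetensors file from REPO_ID into the extension's models folder."""
    # Imported here so that models_dir() works even without the package installed
    from huggingface_hub import snapshot_download

    target = models_dir(extension_dir)
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(
        repo_id=REPO_ID,
        allow_patterns=["*.safetensors"],  # skip README and other non-model files
        local_dir=target,
    )
    return target
```

Call `download_controlnet_models(...)` with the path to your own extension folder, then restart the UI as described above so the new models show up.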

Step 4. How to Use ControlNet

ControlNet options now appear when you scroll down the main UI view. Experiment with the Preprocessor setting (and choose the matching Model for the preprocessor). The first time you use a given preprocessor, it downloads the files it needs, which takes some time. Check the console for processing info.

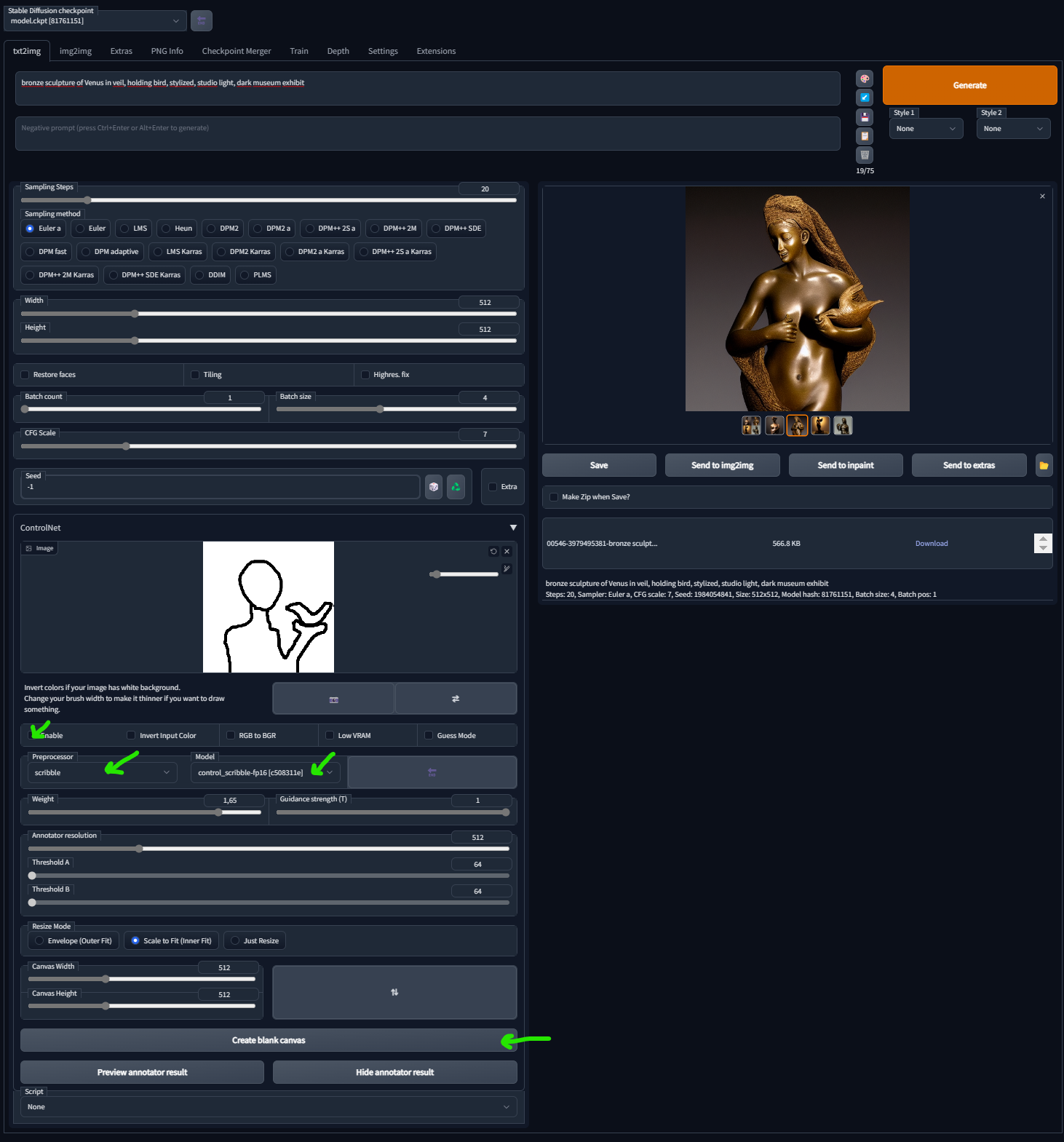

To control the output with a scribble sketch, choose the scribble Preprocessor and the scribble control Model. Use the Create blank canvas button, scribble a sketch of the composition on the white canvas, and check Enable.
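Roughly speaking, a scribble control map is a binary image of the strokes. The sketch below assumes the convention of the original ControlNet scribble demo, where dark strokes on a white canvas become white strokes on a black background. The extension's own preprocessor may also resize or otherwise process the image, so treat `scribble_to_control_map` as a hypothetical illustration rather than its actual code.

```python
import numpy as np


def scribble_to_control_map(rgb, threshold=127):
    """Turn a dark-on-white scribble into a white-on-black control map.

    rgb: uint8 array of shape (H, W, 3), e.g. a sketch drawn on the blank canvas
    Returns a uint8 array of the same shape with strokes at 255 and background at 0.
    """
    control = np.zeros_like(rgb)
    # A pixel counts as a stroke if its darkest channel is below the threshold
    stroke = rgb.min(axis=2) < threshold
    control[stroke] = 255  # set all three channels of stroke pixels to white
    return control
```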

Experiment with different preprocessors and settings to fine-tune the output, and combine them with other strategies.

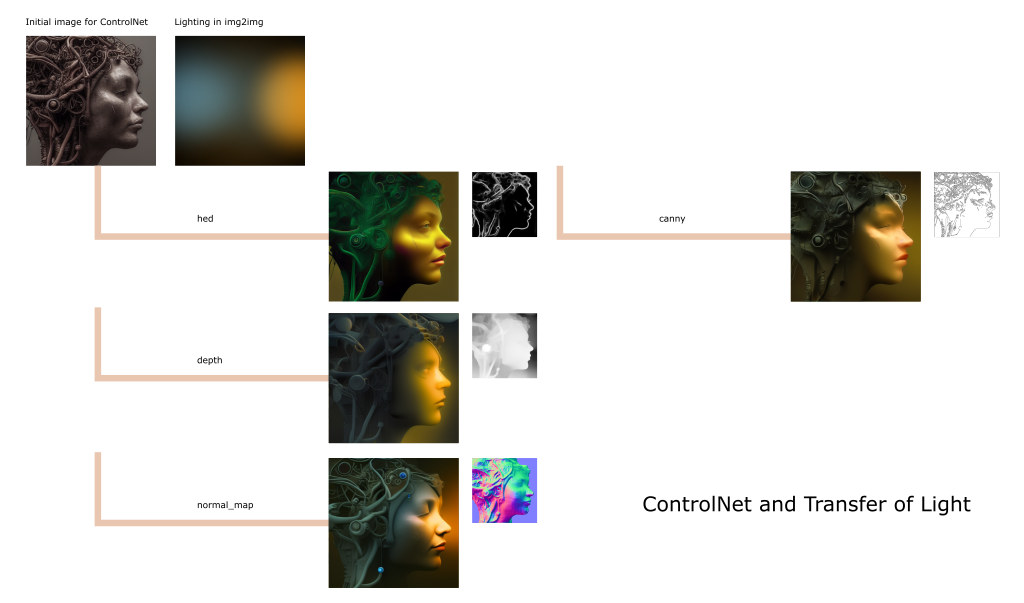

Lighting With ControlNet

You can use ControlNet to control many aspects of the image, including lighting or level of detail. In img2img mode, the ControlNet image serves as the source of structure, while the img2img image influences the "light".
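If you run AUTOMATIC1111 with the `--api` flag, the same split between structure and light can be expressed as an img2img request. The sketch below only builds the JSON payload. The `alwayson_scripts`/`controlnet` block follows the format documented for the extension's API, but unit field names (such as `input_image`) have changed between versions of the extension, so check them against the version you have installed. `lighting_payload` is a hypothetical helper, and the `canny` preprocessor default and the model name you pass in are placeholder choices.

```python
import base64


def b64(data: bytes) -> str:
    """Encode raw image bytes (e.g. a PNG file) as a base64 string for the API."""
    return base64.b64encode(data).decode("ascii")


def lighting_payload(structure_png: bytes, light_png: bytes, prompt: str,
                     model: str, module: str = "canny",
                     denoising_strength: float = 0.75) -> dict:
    """Build an img2img payload: ControlNet image for structure, img2img image for light."""
    return {
        "prompt": prompt,
        "init_images": [b64(light_png)],  # img2img source image: influences the light
        "denoising_strength": denoising_strength,
        "alwayson_scripts": {
            "controlnet": {
                "args": [{
                    "input_image": b64(structure_png),  # ControlNet image: source of structure
                    "module": module,                   # preprocessor name (placeholder)
                    "model": model,                     # ControlNet model name as shown in the UI
                }]
            }
        },
    }
```

To send it, post the payload to the running web UI, for example with `requests.post("http://127.0.0.1:7860/sdapi/v1/img2img", json=payload)`, where 7860 is the default port.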

You can stack multiple ControlNet models to control a single image output. The advantage is that you can use several different preprocessors and models at once (if you have enough VRAM). Go to the Settings tab and find ControlNet/Multi ControlNet: Max models amount (requires restart). Choose 2 or 3 (you may experiment with more). After restarting the UI, you will have multiple ControlNet instances in the stack.
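Through the web UI API (available when AUTOMATIC1111 is started with `--api`), stacked ControlNet models correspond to several entries in the ControlNet `args` list, one per unit. This is a hedged sketch: the unit field names follow the extension's documented API format but may differ between versions, and `controlnet_units`, `txt2img_payload`, and the `max_models` check are hypothetical, meant to mirror the Max models amount setting.

```python
import base64


def controlnet_units(units, max_models=3):
    """Build the ControlNet "args" list for a stacked (Multi ControlNet) request.

    units: list of dicts with keys "image" (PNG bytes), "module", "model",
           and optionally "weight" (defaults to 1.0)
    max_models: keep in sync with "Multi ControlNet: Max models amount" in Settings
    """
    if len(units) > max_models:
        raise ValueError(f"{len(units)} units requested, but only {max_models} are enabled")
    return [
        {
            "input_image": base64.b64encode(u["image"]).decode("ascii"),
            "module": u["module"],  # preprocessor for this unit
            "model": u["model"],    # ControlNet model for this unit
            "weight": u.get("weight", 1.0),
        }
        for u in units
    ]


def txt2img_payload(prompt, units, max_models=3):
    """Wrap the stacked units in a txt2img payload."""
    return {
        "prompt": prompt,
        "alwayson_scripts": {"controlnet": {"args": controlnet_units(units, max_models)}},
    }
```

Lowering the weight of one unit (for example, a depth map) lets another unit (for example, a scribble) dominate the composition.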

Conclusion

Creating generative art is hard and time-consuming. It also demands technical expertise, and given the nature of the technology, it can be very frustrating. By developing your skills and experience and continuing your education in art, you can achieve amazing results. To be a good generative artist, I strongly suggest studying classical techniques too, and practicing creating good art.