FLUX.1 in Forge UI: Setup Guide with SD/SDXL Tips

In our previous dive into FLUX.1 on ComfyUI, we discovered the model's potential. Now, let's harness that power within Forge's streamlined interface. This guide walks you through setting up FLUX.1 on Forge and explores new options in the latest Forge version to enhance SD and SDXL image outputs. Let's get started!

How to Install Forge

- Install the free version control manager git from git-scm.com

- In the target folder, open a cmd terminal and run

git clone https://github.com/lllyasviel/stable-diffusion-webui-forge.git

- Run webui-user.bat to download and install dependencies. This finishes the installation and downloads a base SD 1.5 model for tests into \stable-diffusion-webui-forge\models\Stable-diffusion (this is also where you put the other SD/SDXL/FLUX models you download).

Updating Forge

To update, open a cmd terminal in your \stable-diffusion-webui-forge\ folder and run git pull.

FLUX.1 in Forge

FLUX.1 Models and Encoders

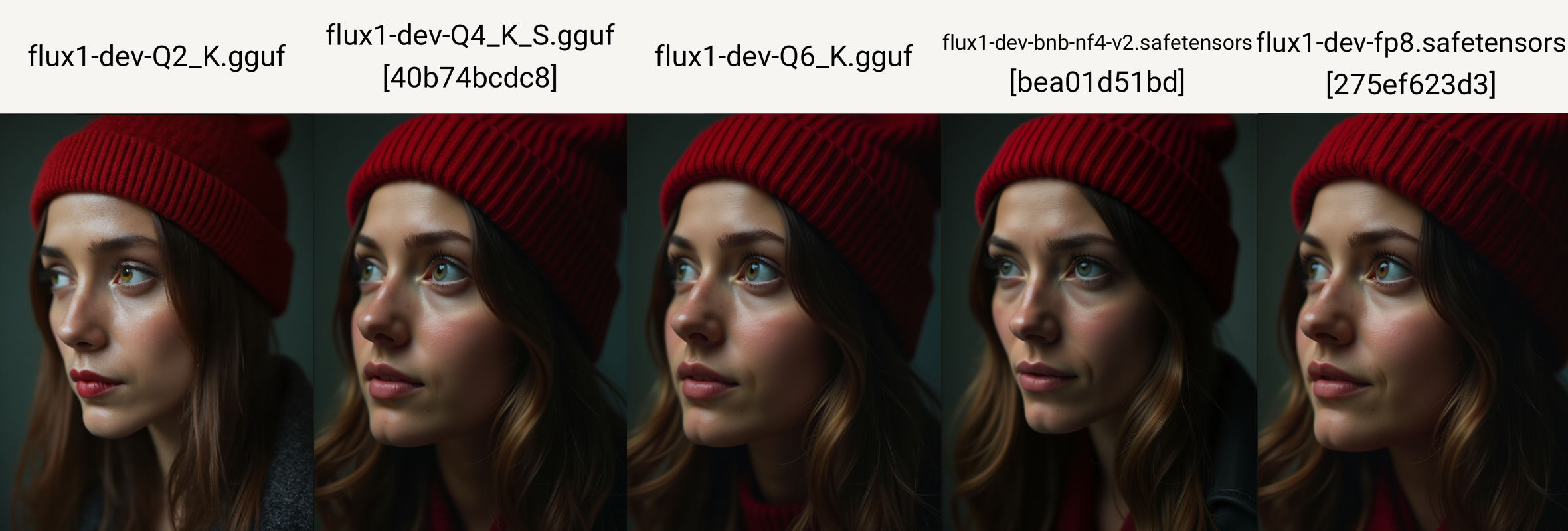

There are several versions of FLUX.1 models. Besides schnell (a fast distilled model) and dev (for local rendering), there are reduced-precision and quantized versions for GPUs with less VRAM.

The models are referred to as FP16 or FP8 (16-bit or 8-bit floating point) and quantized NF4 (4-bit NormalFloat). BNB in a model name stands for bitsandbytes, the low-bit library used to quantize it. GGUF is a binary format optimized for quick loading and saving of models.
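To get a feel for why the lower-precision versions matter, here is a rough back-of-the-envelope sketch. It assumes about 12 billion parameters for the FLUX.1 transformer (the figure published by Black Forest Labs, not stated in this guide) and ignores quantization metadata, the text encoders, and the VAE, so real file sizes will differ somewhat.

```python
# Rough weight-size estimate per precision.
# Assumption: ~12 billion parameters in the FLUX.1 transformer.
PARAMS = 12e9

# Bits stored per weight for each format mentioned above.
BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "NF4": 4}


def weight_size_gb(params: float, bits: int) -> float:
    """Return the approximate weight size in gigabytes (10**9 bytes)."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_size_gb(PARAMS, bits):.0f} GB")
# FP16: ~24 GB
# FP8: ~12 GB
# NF4: ~6 GB
```

Under these assumptions, only the FP8 and NF4 variants come close to fitting the VRAM of typical consumer cards.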

Note that new generations of models like Flux and SD 3.5 produce substantially better results when using longer "captions" in natural language than shorter "tags" to describe the scene. Use LLM chats to expand your prompts or a tool like ArtAgents.

Installation of Models

For a fast start, you can download the FLUX model into "\stable-diffusion-webui-forge\models\Stable-diffusion", without the need to download the VAE and encoders separately:

- https://huggingface.co/lllyasviel/flux1-dev-bnb-nf4/tree/main , download the latest version (for example v2). This is the NF4 model that works in Forge.

- Now just set the Forge profile to FLUX and select the model from the dropdown, and generate an image.

Standard FLUX dev models need more files to work in Forge. Download the other models into the proper folders:

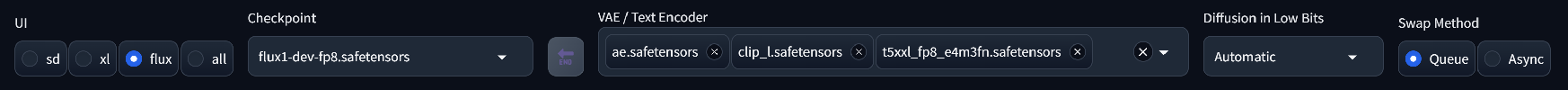

- VAE: https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/ae.safetensors into folder \stable-diffusion-webui-forge\models\VAE

- Encoders: https://huggingface.co/comfyanonymous/flux_text_encoders/tree/main into folder \stable-diffusion-webui-forge\models\text_encoder

- FLUX.1 Models (see below): into folder "\stable-diffusion-webui-forge\models\Stable-diffusion"

- FLUX DEV BNB NF4 https://huggingface.co/lllyasviel/flux1-dev-bnb-nf4/blob/main/flux1-dev-bnb-nf4-v2.safetensors

- FLUX MODELS https://huggingface.co/Kijai/flux-fp8/tree/main (FP8 versions reduced to fit 12 GB of VRAM)

- FLUX QUANTIZED https://huggingface.co/silveroxides/flux1-nf4-weights/tree/main , https://huggingface.co/city96/FLUX.1-dev-gguf/tree/main
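The folder layout above can be summarized in a small helper. This is only an illustrative sketch: the folder names come from the list above, while the `kind` labels and the function name are hypothetical and not part of Forge.

```python
from pathlib import Path

# Folder names are taken from the list above; the keys are hypothetical labels.
MODEL_SUBFOLDERS = {
    "checkpoint": Path("models") / "Stable-diffusion",
    "vae": Path("models") / "VAE",
    "text_encoder": Path("models") / "text_encoder",
}


def target_path(forge_root: str, kind: str, filename: str) -> Path:
    """Build the destination path for a downloaded file of the given kind."""
    if kind not in MODEL_SUBFOLDERS:
        raise ValueError(f"unknown kind: {kind!r}")
    return Path(forge_root) / MODEL_SUBFOLDERS[kind] / filename


# Example: where the FLUX VAE should end up.
print(target_path("stable-diffusion-webui-forge", "vae", "ae.safetensors"))
```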

Flux-schnell/dev Differences

Flux-dev has a different (non-commercial) license. This should not affect you if you are an end user running the model locally to generate images (read the license nonetheless). Flux-schnell is very fast (generating nice images in just 3-5 steps) and adheres slightly better to prompts, but Flux-dev offers more creative options since it can use both distilled CFG and normal CFG to affect image output.

ControlNet and FLUX

There are ControlNet models for FLUX, but they are not yet working in Forge ControlNet at the time of writing this article.

LoRAs and FLUX

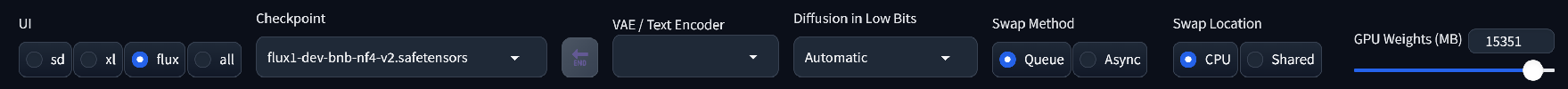

To run LoRAs trained for FLUX in Forge, set "Diffusion in Low Bits" to Automatic (fp16 LoRA). When using GGUF models, go with "Q4_K, Q6_K" models (not K_S) for faster rendering (results may be affected by your VRAM size). Both dev and schnell FLUX models should work.

Hyper-SD LoRAs open other possibilities for experimentation; read more in this recent article on Hyper-Flux (Hyper-SD).

Errors and Issues

I got black or grey outputs at the end, but the image renders during the steps

You need to add the proper VAE for the base model you use. For the flux1-dev-fp8.safetensors model, it is ae.safetensors from https://huggingface.co/black-forest-labs/FLUX.1-dev/blob/main/ae.safetensors
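Problems of this kind usually come down to a file missing from its folder. Below is a minimal sketch that looks into the VAE and text encoder folders described under Installation of Models. The filename matching ("clip", "t5") is a heuristic assumption about how the encoder files are named, and the function is hypothetical, not something Forge provides.

```python
from pathlib import Path


def missing_components(forge_root: str) -> list[str]:
    """Return which of VAE / CLIP / T5 appear to be missing (filename heuristic)."""
    models = Path(forge_root) / "models"

    def names(folder: Path) -> list[str]:
        # A folder that does not exist counts as empty.
        if not folder.is_dir():
            return []
        return [p.name.lower() for p in folder.glob("*.safetensors")]

    vae_files = names(models / "VAE")
    encoder_files = names(models / "text_encoder")

    missing = []
    if not vae_files:
        missing.append("VAE")
    if not any("clip" in n for n in encoder_files):
        missing.append("CLIP")
    if not any("t5" in n for n in encoder_files):
        missing.append("T5")
    return missing


print(missing_components("stable-diffusion-webui-forge"))
```

An empty list only means the files are present; they still need to be selected in the "VAE / Text Encoder" list in the UI.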

When trying to generate image with FLUX model, you get AssertionError: You do not have CLIP state dict!

"AssertionError: You do not have CLIP state dict!" or "AssertionError: You do not have T5 state dict!" or "AssertionError: You do not have VAE state dict!" means you need to add models to "VAE / Text Encoder" UI list (see Installation of Models). The models need to be in proper folders.

Diffusion in Low Bits and SDXL/SD Experiments

In the new version of Forge there is a dropdown in the "Flux" and "All" UI profiles where you can adjust Diffusion in Low Bits. Interestingly, it affects all models (SD/SDXL/FLUX) and can produce a better image with some experimenting. For the time being you can choose between Automatic, Automatic (fp16 LoRA), bnb-nf4, float8-e4m3fn, float8-e4m3fn (fp16 LoRA), bnb-fp4, float8-e5m2, float8-e5m2 (fp16 LoRA).

Note: The Diffusion in Low Bits setting is not saved into the image metadata yet.
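The two float8 options differ in how their eight bits are split between exponent and mantissa. The sketch below assumes Forge's option names follow the usual 8-bit float definitions (the same ones behind PyTorch's `torch.float8_e4m3fn` and `torch.float8_e5m2`); it computes the largest finite value and the relative step size of each format to show the trade-off between range and precision.

```python
def max_finite(exp_bits: int, man_bits: int, ieee_like: bool) -> float:
    """Largest finite value of a small floating-point format.

    ieee_like=True:  the top exponent code is reserved for inf/NaN (e5m2).
    ieee_like=False: "fn" variant, only the all-ones bit pattern is NaN (e4m3fn).
    """
    bias = 2 ** (exp_bits - 1) - 1
    if ieee_like:
        max_exp = (2 ** exp_bits - 2) - bias
        max_man = 2 - 2.0 ** -man_bits
    else:
        max_exp = (2 ** exp_bits - 1) - bias
        # The all-ones mantissa is NaN, so the largest usable one is one step lower.
        max_man = 2 - 2.0 ** -(man_bits - 1)
    return max_man * 2.0 ** max_exp


# (exponent bits, mantissa bits, IEEE-like special values)
FORMATS = {"float8-e4m3fn": (4, 3, False), "float8-e5m2": (5, 2, True)}

for name, (e, m, ieee) in FORMATS.items():
    print(name, max_finite(e, m, ieee), 2.0 ** -m)
# float8-e4m3fn 448.0 0.125
# float8-e5m2 57344.0 0.25
```

In short, e4m3fn keeps finer steps over a smaller range, while e5m2 covers a wider range with coarser steps, which may explain why the options give visibly different images.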

Conclusion

While the rapid recent evolution of the Forge UI and the novel FLUX.1 model (from Black Forest Labs, now supported in Forge alongside the Stable Diffusion models) have undoubtedly pushed the boundaries of AI art generation, it's essential to acknowledge that the journey is not yet entirely smooth. Users might encounter occasional hiccups due to the software's developmental stage, such as some issues with ControlNet models (which are currently also under development).

Additionally, the speed and hardware requirements of the novel models (FLUX, SD3, AuraFlow) are substantial. However, with the emergence of several smaller versions that fit into the VRAM of consumer graphics cards, we can now experiment with the new base model. Fine-tuning and LoRA training further open up possibilities for this new model, and we will soon see if the FLUX models will be as flexible as SD 1.5 and SDXL models.

References

- BNB https://github.com/lllyasviel/stable-diffusion-webui-forge/discussions/981

- GGUF, NF4 and quantizations https://github.com/lllyasviel/stable-diffusion-webui-forge/discussions/1050

- FLUX gguf conversions https://huggingface.co/city96/FLUX.1-dev-gguf/tree/main

- FLUX Black Forest Lab https://github.com/black-forest-labs/flux

- FLUX quantized NF4 https://huggingface.co/silveroxides/flux1-nf4-weights/tree/main

- new: FLUX GGUF schnell conversions https://huggingface.co/lllyasviel/FLUX.1-schnell-gguf/tree/main

- new: FLUX GGUF dev conversions https://huggingface.co/lllyasviel/FLUX.1-schnell-gguf/tree/main