FluxTurbo vs. HyperFlux LoRAs: Generate FLUX.1-dev Image in 4-9 Steps

FluxTurbo is another solution to reduce generation times when using the FLUX.1-dev generative AI model. It can cut generation time in half or more. The technique uses distilled LoRAs, similar to the Hyper-SD/HyperFlux method.Considering that FLUX.1-dev typically requires 20-30 steps to produce a final image, this technique presents a promising solution. We will explore this further in this tutorial-review article.

Four Steps, Really?

You can achieve impressive results with just four steps (see examples). For even more precise anatomy and details (hands), consider increasing the steps to five or more. In general, the optimal range for generating high-quality images is 7-9 steps.

Installation

Download the files into your LoRA folder.

- FluxTurbo Alpha (name teh .safetensors model as you wish) https://huggingface.co/alimama-creative/FLUX.1-Turbo-Alpha/tree/main

- HyperSD (Hyperflux) https://huggingface.co/ByteDance/Hyper-SD/tree/main

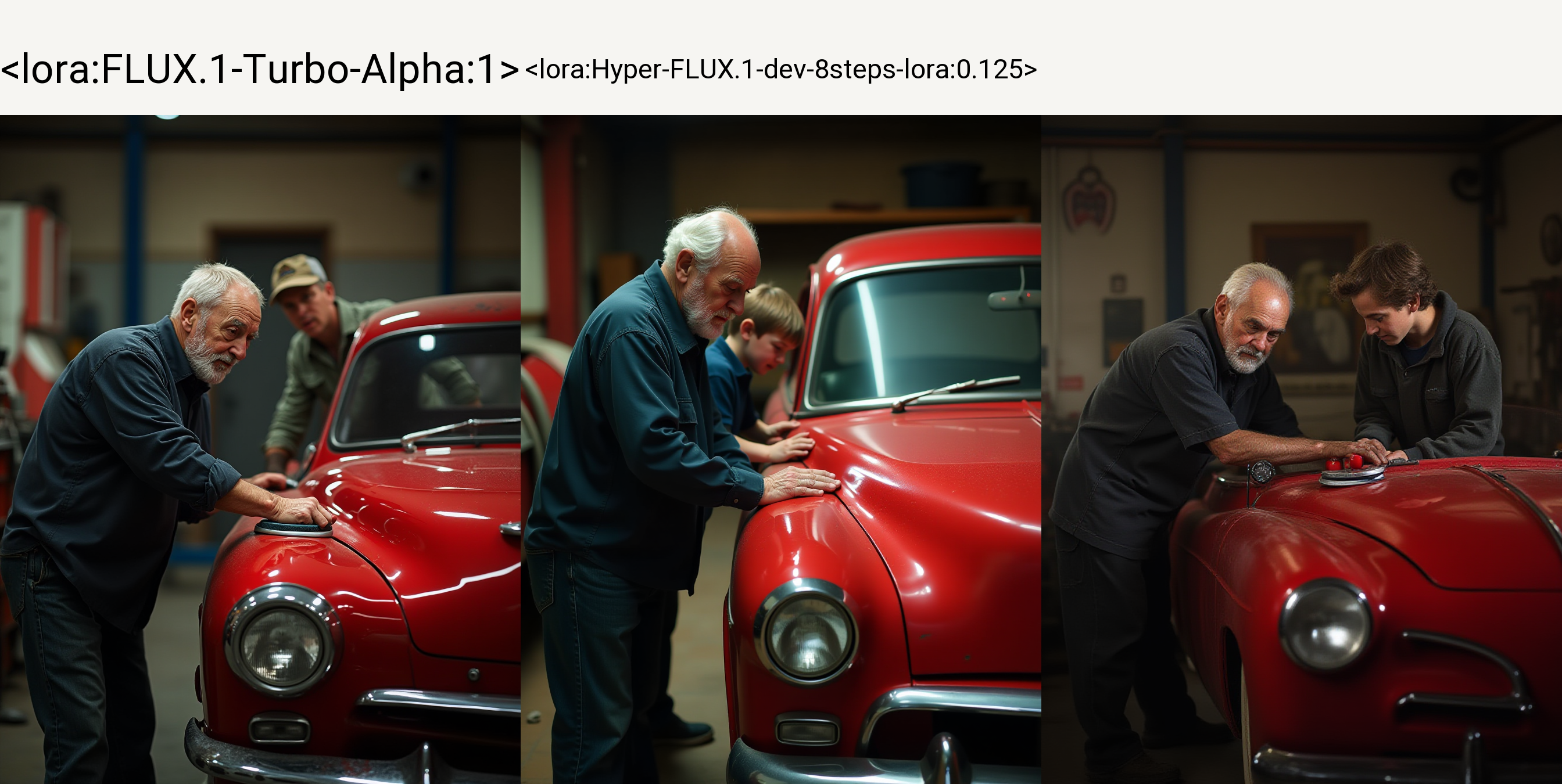

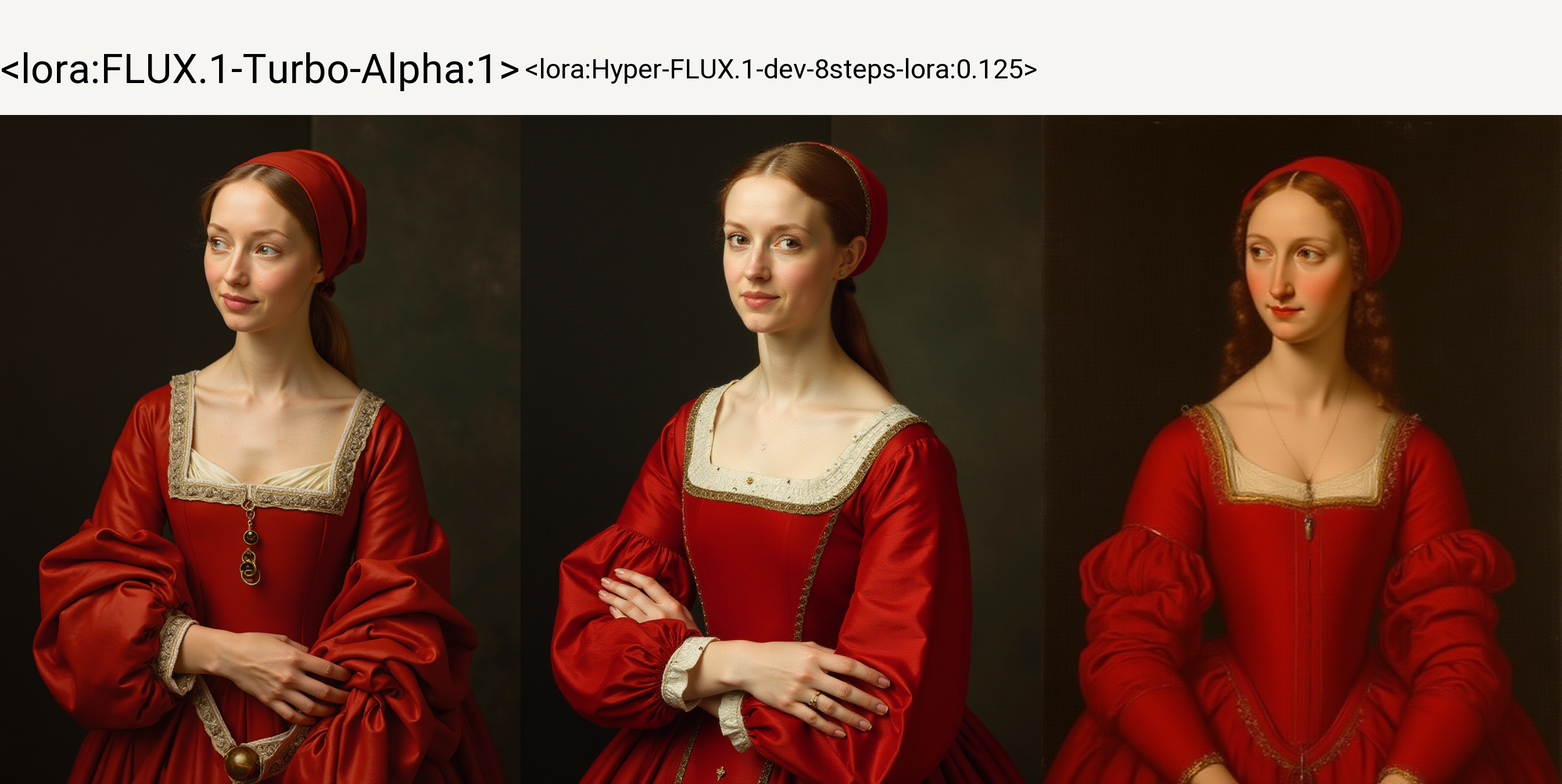

FluxTurbo Alpha is used with standard weight 1.0, HyperSD needs weight 0.125 (1.3 in ComfyUI).

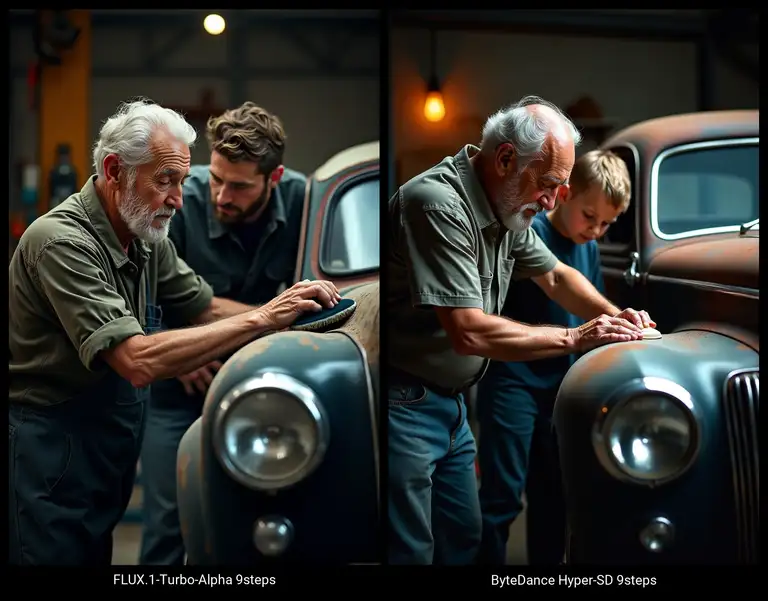

Comparison Overview

((2 persons, old man Josh with his son Jake repairing a car)), vintage car,(Josh is polishing the car, Jake is watching him ready to help), chiaroscuro, photography, masterpiece, best quality, 8K, HDR, highres, front to cameraAs you can see, FluxTurbo tends to produce finer details at earlier stages. Aesthetically, FluxTurbo's output is comparable to that of an 9-step HyperSD (HyperFlux) model. In this case, FluxTurbo is better in realism (see the car details).

As prompts become more complex, the outputs generated by FluxTurbo and Hyper-SD are likely to diverge more significantly.

(2 persons in dialogue) an older woman with red lipstick in a hurry is turning back with a confident stride, talking to a male bald friend with glasses looking at a book, ((busy)), streets of paris,(crowd before bookshop), , chiaroscuro, photography, masterpiece, best quality, 8K, HDR, highres, front to cameraAs it seems both FluxTurbo and HyperSD tend to emulate a higher number of steps (than the standard 20) used for output in the standard Flux-dev model.

Find more examples and workflows on sandner.art github FluxTurbo section.

Hyperflux GGUF Checkpoints

You can download GGUF versions of the models (usable as a Flux checkpoint and not needing the LoRAs). Download link in References.

HyperFlux and Styles

Both techniques allows more options for using styles (just by prompting, without a specific style LoRA) than Flux.1-schnell model. The lesser number of steps opens more space for experimentation. Read more about using styles in Flux in the next article.

Conclusion

Both FluxTurbo and HyperFlux LoRAs deliver on their promises. FluxTurbo is slightly faster (approximately 10%) and tends to produce better details at lower step counts. However, their quality becomes comparable around 8 steps, where both solutions generate high-quality output.

Compared to Flux.1-schnell (which can produce good results in just 3-5 steps), FluxTurbo offers the advantage of allowing you to use Flux.1-dev-specific features like CFG and Distilled CFG. This technique also shows potential for inpainting applications.

Importantly, both FluxTurbo and HyperFlux LoRAs are compatible with Forge and ComfyUI (use it as a LoRA model). And both LoRAs are a great option for local generations—especially with slower GPUs and limited resources.

References and Resources

- AlimamaCreative Team Flux.1 Turbo Alpha https://huggingface.co/alimama-creative/FLUX.1-Turbo-Alpha/tree/main

- Workflows on model page above, or check my experimental workflows on sandner.art github (/FLUX/FLUX-TURBO-ALPHA)

- HyperFlux GGUF models (quantized versions of the full Flux-dev models with Hyperflux): https://huggingface.co/martintomov/Hyper-FLUX.1-dev-gguf/tree/main