High Dynamic Range (HDR) in SDXL Stable Diffusion Models

The goal of High Dynamic Range (HDR) is to reproduce a scene with more detail in both the bright and the dark areas, for a more realistic and visually striking result. It aims to approximate the tonal range of the human eye. We want to avoid large pools of extremely dark or bright areas, which result from under- or overexposed images. HDR can make images look more natural and leaves more freedom for color and tone stylization in post-processing. The technique is widely used in professional photography.

Exposure Bracketing

Bracketing in photography means taking several shots in rapid succession, usually in unprocessed RAW format, each with a different exposure. The goal is either to pick the best-exposed image or to combine all of them into an HDR photo with a wider tonal range. We will apply a similar concept using a Stable Diffusion script.
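To make the idea concrete, here is a toy simulation in Python with NumPy. It is not part of VectorscopeCC or A1111: it assumes a linear-light scene and models each shot as the scene scaled by 2 to the power of the exposure offset (one stop doubles or halves the light), clipped to [0, 1] and quantized to 8 bits. The function names are hypothetical.

```python
import numpy as np

def expose(scene, ev, bits=8):
    """Simulate one bracketed shot of a linear-light scene.

    Each stop (EV) doubles or halves the light. The result is clipped to
    [0, 1] and quantized to `bits` per channel, like a stored image file.
    """
    levels = 2 ** bits - 1
    shot = np.clip(np.asarray(scene, dtype=np.float64) * 2.0 ** ev, 0.0, 1.0)
    return np.round(shot * levels) / levels

def clipped_mask(shot):
    """True where a pixel is pure black or pure white, i.e. its detail is lost."""
    return (shot <= 0.0) | (shot >= 1.0)

def make_brackets(scene, stops=(-4.0, 0.0, 4.0)):
    """Return {ev: shot} for every exposure in the bracket."""
    return {ev: expose(scene, ev) for ev in stops}

# Toy scene: one pixel per stop, from 2**-10 to 2**3 (a 13-stop range).
scene = np.geomspace(2.0 ** -10, 2.0 ** 3, num=14)
brackets = make_brackets(scene)
for ev, shot in brackets.items():
    print(f"EV {ev:+.0f}: {clipped_mask(shot).sum()} of {scene.size} pixels clipped")
```

No single shot keeps all 14 pixels, yet every pixel is unclipped in at least one of the three shots, which is the property an HDR merge relies on.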

Expanding the Dynamic Range Using a Script

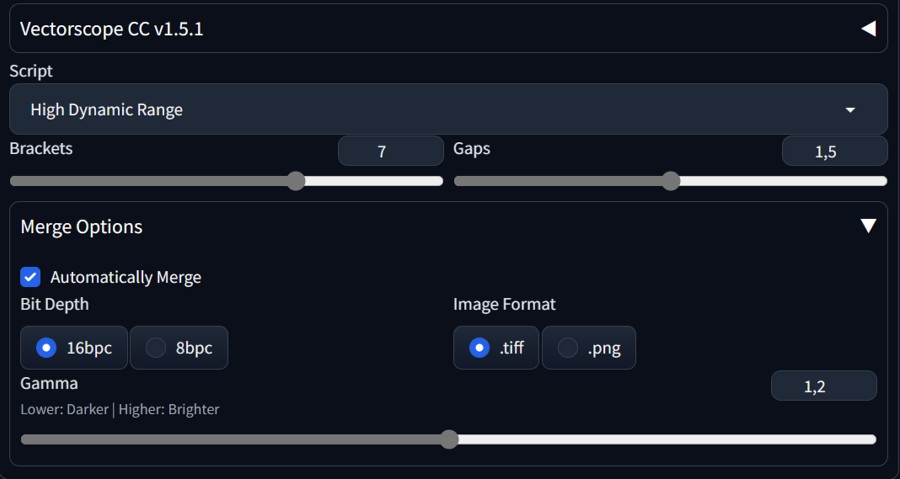

VectorscopeCC comes to the rescue. This extension adjusts color, brightness, and contrast during diffusion (similar to offset noise). It also includes a script aptly named 'High Dynamic Range', which outputs just that.

You can install the VectorscopeCC extension from Extensions/Available/Load from: find it in the list, click Install, and restart A1111. The script then appears in the Script dropdown at the bottom of the txt2img and img2img tabs.

Pros and Cons

You can use Hires fix with a low Denoising strength (0.1-0.2) to avoid larger changes between bracket images, at the cost of some of the upscaler's reconstructive power.

Hires fix upscaling and ADetailer tricks are limited because the output image changes substantially between brackets. The upscaled images are not entirely consistent with each other, which becomes evident once the brackets are merged. This limits the effective output resolution, because you need to generate directly at the final resolution. Some details and inconsistencies can be easily retouched in a photo editor. While ControlNet can protect prominent facial features, in most cases I believe it would be overkill with an uncertain result. With the script you can create:

- a 16-bit or 8-bit merge of the bracket images

- a set of images with varying tonality

- but not a 32-bit HDR image (yet?)
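Why the 16-bit option matters can be illustrated by quantizing a subtle shadow gradient at both bit depths. This is a hypothetical NumPy sketch, not the script's own saving code, and the gradient values are made up for illustration.

```python
import numpy as np

def quantize(image, bits):
    """Store a float image in [0, 1] as unsigned integers with `bits` per channel."""
    dtype = np.uint8 if bits <= 8 else np.uint16
    levels = 2 ** bits - 1
    return np.round(np.clip(image, 0.0, 1.0) * levels).astype(dtype)

# A subtle dark gradient, e.g. a shadowed wall after merging brackets.
gradient = np.linspace(0.0, 0.02, num=1000)
for bits in (8, 16):
    distinct = np.unique(quantize(gradient, bits)).size
    print(f"{bits}-bit: {distinct} distinct tones")
```

The 8-bit version collapses the gradient into a handful of tones, which shows up as banding once you push the shadows in post-processing, while the 16-bit version keeps every step.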

Although the script does not officially support SDXL, its brightness adjustment works with SDXL models.

Important Tips

- Generate only one image at a time (batch count and batch size of 1)

- As mentioned above, keep the denoising strength low when upscaling images or brackets

- Images with very sharp shadows defined by the prompt will not benefit from the script

- You can also use the script effectively in img2img: load an image, set the size and a low denoising strength, and select the script
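If you drive A1111 from code instead of the UI, the img2img tip translates into a request like the following. This is a sketch assuming the web UI was started with the `--api` flag and listens on the default `http://127.0.0.1:7860`; the field names come from A1111's `/sdapi/v1/img2img` endpoint, while the helper names and the 0.2 denoising ceiling are illustrative choices. Selecting the VectorscopeCC script through the API (`script_name`/`script_args`) is deliberately left out, because its argument list is not covered here.

```python
import base64
import json
import urllib.request

# Assumption: local web UI started with --api on the default port.
A1111_URL = "http://127.0.0.1:7860"

def build_img2img_payload(image_bytes, width, height, prompt="", denoising_strength=0.15):
    """Hypothetical helper: one image at a time, low denoising, as in the tips."""
    if not 0.0 <= denoising_strength <= 0.2:
        raise ValueError("keep denoising_strength low (about 0.1-0.2) so brackets stay consistent")
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "width": width,
        "height": height,
        "denoising_strength": denoising_strength,
        "batch_size": 1,  # batch size of 1
        "n_iter": 1,      # batch count of 1
    }

def send_img2img(payload, url=A1111_URL):
    """POST the payload to the img2img endpoint and return the decoded JSON response."""
    request = urllib.request.Request(
        f"{url}/sdapi/v1/img2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

The guard on `denoising_strength` is only a reminder of the advice above; widen it if your images tolerate more change between brackets.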

Can VAE Solve the Dynamic Range?

Possibly, but I am not aware of a VAE that does it. The VAE solutions I know of only flatten the tonal range, giving the output a RAW look without actually extending the dynamic range.

Other Approaches

You can also generate the HDR "bracket" images manually, using the same seed with different brightness settings, and merge them with a dedicated HDR merging tool (usually commercial or prehistoric). Darktable will not merge non-RAW images, but you can do exposure blending in GIMP:

Manual exposure blending in GIMP

This example uses two brackets, a dark one and a light one.

- Open both images in GIMP as layers (File/Open as Layers), with the lighter image on top.

- Change the image precision to 16-bit floating point via Image/Precision.

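- Add a layer mask to the top layer with the "Add Layer Mask" button in the Layers dock, initialize it to "Grayscale copy of layer", and check "Invert mask" so that the darker image shows through in the bright areas.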

- Merge the layers, for example with Image/Flatten Image.
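The GIMP steps above boil down to a per-pixel mix, sketched here with NumPy for float RGB arrays. Two assumptions: Rec. 709 luma weights approximate GIMP's grayscale conversion, and the mask is inverted, since a plain grayscale copy of the light layer would keep the light bracket exactly where it is blown out. The function name is hypothetical.

```python
import numpy as np

# Assumption: Rec. 709 luma weights stand in for GIMP's "Grayscale copy of layer".
LUMA = np.array([0.2126, 0.7152, 0.0722], dtype=np.float32)

def blend_two_brackets(dark, light):
    """Blend a dark and a light bracket like the GIMP layer-mask steps.

    `dark` and `light` are RGB arrays with values in [0, 1] and shape (H, W, 3).
    The mask is the inverted grayscale of the light (top) layer: where the light
    bracket is bright and likely blown out, the dark bracket shows through;
    where it is dark, the light bracket is kept.
    """
    dark = np.asarray(dark, dtype=np.float32)     # float math, like the 16-bit float precision step
    light = np.asarray(light, dtype=np.float32)
    mask = np.clip(1.0 - light @ LUMA, 0.0, 1.0)  # (H, W); 1 = show the top (light) layer
    mask = mask[..., np.newaxis]                  # broadcast the mask over the RGB channels
    return mask * light + (1.0 - mask) * dark     # same as flattening the masked top layer
```

Working in float avoids rounding the intermediate mix to 8 bits, which is the reason for switching GIMP to floating point precision before merging.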

This is a cheaper version of a true HDR merge, but it may be suitable for your needs. You can also paint on the mask manually to refine details, which lets you get away with fewer brackets.
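With more than two brackets, such as a full set from the script, the same idea generalizes to a per-pixel weighted average. The sketch below is a simplified, single-scale take on exposure fusion (Mertens et al.): it keeps only the "well-exposedness" weight, computed on the channel mean, and drops the contrast and saturation terms and the multi-resolution blending that real tools use to avoid halos. It is not what VectorscopeCC does internally; the function name and the `sigma=0.2` default are illustrative assumptions.

```python
import numpy as np

def fuse_exposures(brackets, sigma=0.2):
    """Naive single-scale exposure fusion of any number of aligned brackets.

    Each pixel of each bracket is weighted by how close its gray value is to
    mid-gray (0.5); the output is the per-pixel weighted average of the brackets.
    """
    stack = np.stack([np.asarray(b, dtype=np.float32) for b in brackets])  # (N, H, W, 3)
    gray = stack.mean(axis=-1, keepdims=True)                              # (N, H, W, 1)
    weights = np.exp(-((gray - 0.5) ** 2) / (2.0 * sigma ** 2))            # well-exposedness
    weights /= weights.sum(axis=0, keepdims=True) + 1e-12                  # normalize per pixel
    return (weights * stack).sum(axis=0)                                   # (H, W, 3)
```

Because the weights are normalized per pixel, adding a bracket never brightens or darkens the result by itself; it only shifts each pixel toward whichever bracket exposes it best.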

Conclusion

It is an interesting concept (VectorscopeCC and its script are awesome), and in a way, it works. If the bracket exposures did not alter the composition, and the Stable Diffusion outputs themselves had a higher tonal range, the process would be almost perfect. Due to the nature of the SD process, flat color pools or artifacts can still occur, but overall you can create a nice HDR approximation.

In the future, as models allow for more consistent details, I can envision a method of creating HDR images in Stable Diffusion, even without a specific model generating 16-bit color range images. In the meantime, this workflow serves as an intriguing starting point for experiments.

References

- VectorscopeCC: https://github.com/Haoming02/sd-webui-vectorscope-cc

- Illustrations created with the Cinematix SDXL Stable Diffusion model/merge