Prompting Art and Design Styles in Flux in Forge and ComfyUI

While I prefer developing a unique art style over mimicking existing artists' styles in generative AI, styles remain crucial for guiding the diffusion process and achieving good compositions. Even the SDXL base model requires style descriptions to create a decent image. Flux creates nice images out of the box with minimal style description, but this may pose an issue later. The default hyperrealistic style will peek out from time to time unless it is actively prompted out, especially in quantized versions of Flux.

"Flux does not copy artists' styles too closely.

I think this is good."

Although Flux, unlike SD or SDXL, is often poor at replicating specific painterly styles, much can be achieved through clever prompting. This article demonstrates various options for using, blending, and importing styles in Forge and ComfyUI. While the workflows are designed for Flux, similar principles can be adapted for SD/XL models.

Prompt Your Way Out

Simply prompting "in the style of" does not work very well in Flux. I got the best results by doubling down with a formulation like "by XY, XY art style" combined with a description of the technique (see example workflows) at the beginning of the prompt. You can also succeed with a deliberately verbose description of the artistic technique in natural language.
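The doubled-down formulation is easy to template. Here is a minimal sketch, assuming you build prompts as plain strings before pasting them into Forge or ComfyUI. The function name `build_style_prompt` and the sample technique text are hypothetical, not part of any workflow mentioned here.

```python
def build_style_prompt(subject, artist, technique):
    """Put the doubled style reference and the technique first, then the subject."""
    # "by XY, XY art style" is the formulation described in the article.
    style_lead = f"by {artist}, {artist} art style"
    parts = [style_lead, technique.strip(), subject.strip()]
    # Skip empty parts so a missing technique does not leave a stray comma.
    return ", ".join(part for part in parts if part)


print(build_style_prompt(
    subject="rich woman, red dress",
    artist="XY",  # placeholder, replace with the artist you are after
    technique="oil painting, visible brushstrokes",  # hypothetical technique text
))
```

Keeping the style reference at the very start of the string matches the observation that it works best at the beginning of the prompt.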

SD Style Tags Example

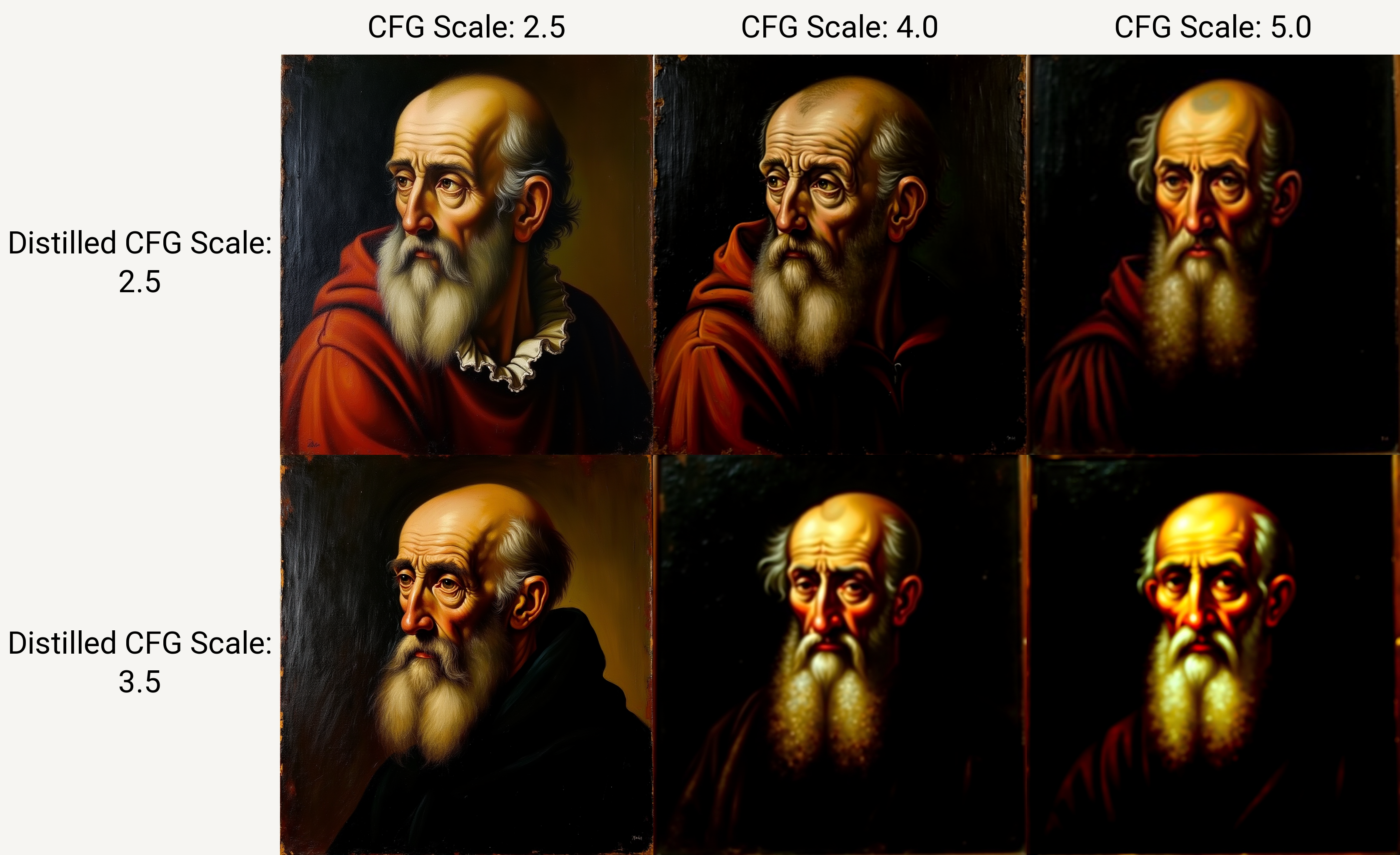

This method requires some CFG tricks in Flux. Using a negative prompt and fine-tuning CFG Scale and Distilled CFG Scale helps to hold the artistic style. You may experiment with the Flux style-prompting workflow.

In ComfyUI, using a negative prompt with a Flux model requires the Beta sampler for much better results. The issue is not as strong in Forge, but there too it helps you avoid blurry images at lower step counts.

Natural Language Example

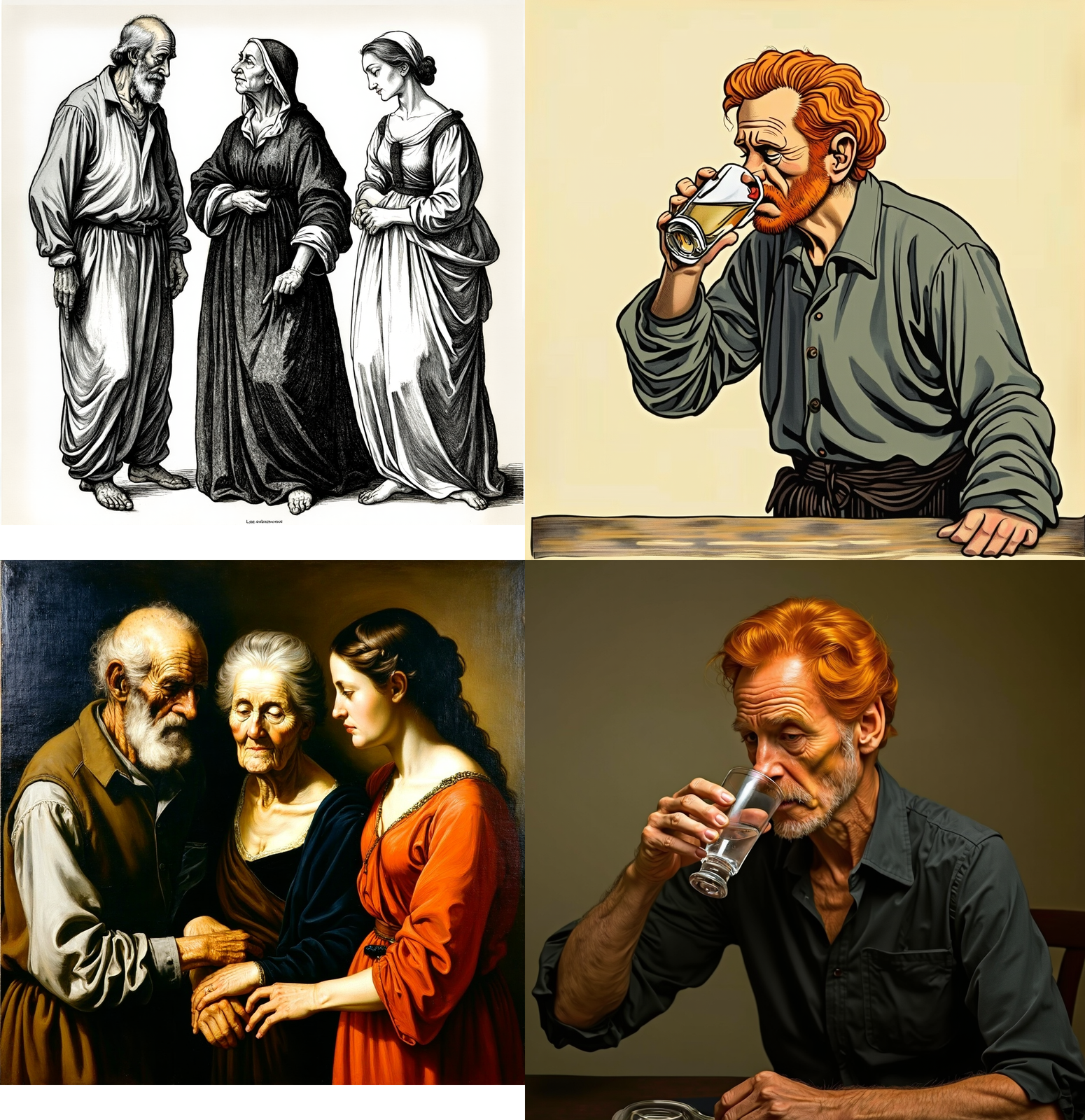

Let us try this generally defined Renaissance painting style.

subject: rich woman, red dress

style:

The painting features precise detail, realism, and naturalism. Figures and objects are anatomically accurate and display a deep understanding of perspective, creating depth and dimensionality.

Lighting is soft and diffused, often from a single source, with gentle shadows enhancing forms and textures. Chiaroscuro is subtly used to highlight focal points.

The color palette is rich and vibrant, favoring earthy tones and jewel-like hues. Colors are applied in thin, translucent layers for a luminous effect. Sfumato blends colors and tones without clear outlines, creating smooth transitions.

Composition follows classical principles, with balanced and symmetrical arrangements. The golden ratio and geometric principles guide element placement, ensuring harmony and order. Backgrounds are detailed, often including landscapes or architecture for context and depth.

Brushwork is delicate and controlled, with fine details and intricate patterns. The overall effect is refined and elegant, reflecting ideals of beauty and harmony.

We can absolutely use this approach (especially with mixing prompts with concept fusion), but we can see how the other attributes seep into the image. The photographic bias of Flux can be challenging to overcome, especially as the number of prompt tokens increases.

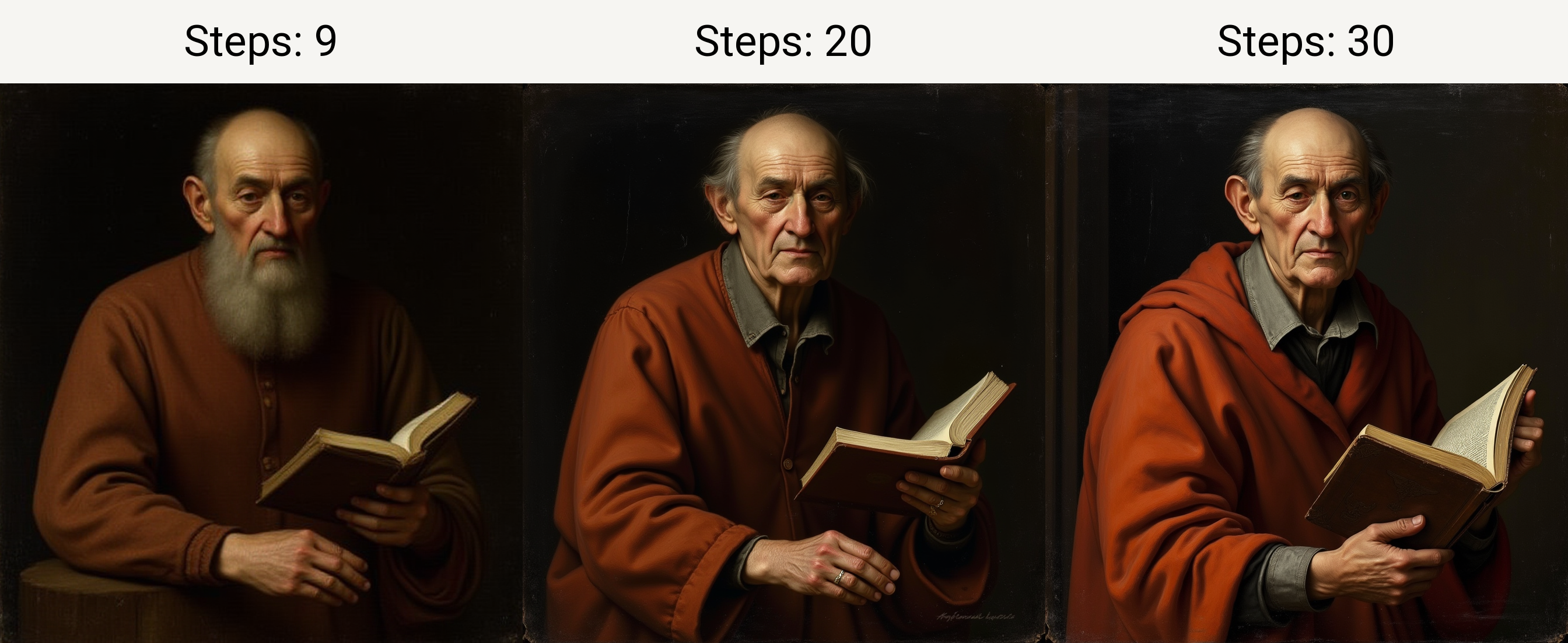

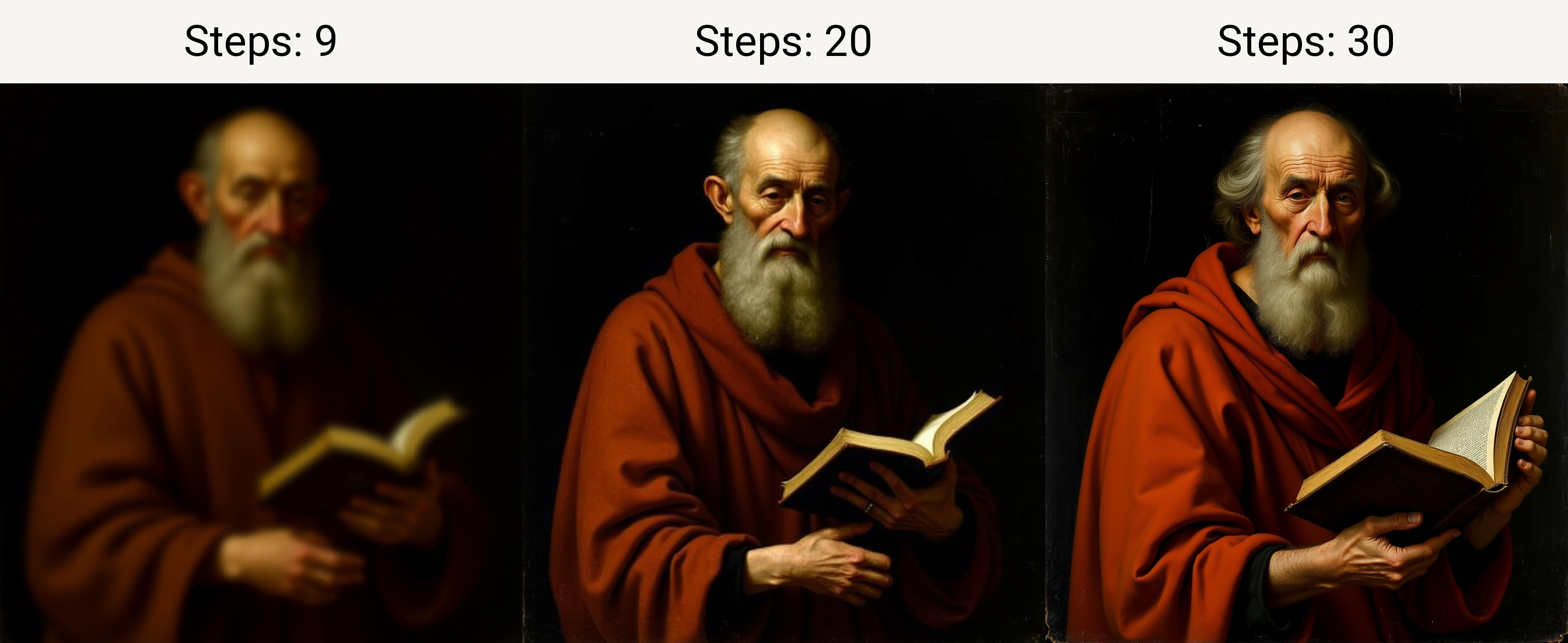

Tip for Flux-dev

Using a lower number of steps with style prompting can help with painterly styles and gives quick results. Try 8-10 sampling steps instead of 20-30.

Using SDXL Styles in Workflows

Ready-made style combinations are available in the ComfyUI node Milehigh Styler (install it with Manager). A preview of the styles is at https://enragedantelope.github.io/Styles-FluxDev/. You may test these in Forge by converting the .json format into .csv, or just use my Flux/SDXL/SD styles and style conversions (see Resources).

Despite the differences in Flux, many SDXL styles work nicely.

Importing ComfyUI Styles to Use in Forge/A1111 (and Back)

How to convert .json styles into .csv styles (Forge) and back:

- Download styleconvertor from GitHub

- Put your style files into the same directory

- Open a terminal (cmd) in that directory and run:

python styleconvertor.py

- Follow the instructions

Use this process to convert .json styles for use in Forge (you can convert between .json and .csv in both directions). After conversion, put the .csv file into the Forge main folder. Rename the style file to styles.csv so that it shows up in the Forge style list!
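If you are curious what such a conversion involves, here is a minimal sketch. It is not the styleconvertor script itself. It assumes the .json file is a list of objects with "name", "prompt", and "negative_prompt" keys, and that Forge reads a styles.csv with a name,prompt,negative_prompt header. Check both against your own files before relying on it.

```python
import csv
import io
import json


def styles_json_to_csv(json_text):
    """Convert a JSON list of style objects into styles.csv text."""
    styles = json.loads(json_text)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Assumed header of the Forge/A1111 style file.
    writer.writerow(["name", "prompt", "negative_prompt"])
    for style in styles:
        writer.writerow([
            style["name"],
            style.get("prompt", ""),
            style.get("negative_prompt", ""),
        ])
    return buffer.getvalue()


def styles_csv_to_json(csv_text):
    """Convert styles.csv text back into a JSON list of style objects."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return json.dumps([dict(row) for row in rows], indent=2)
```

The csv module quotes any prompt that contains commas, so long style prompts survive the round trip unchanged.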

There is also another method for style transfer, using a guiding image instead of a style description in the prompt:

Transfer Styles: Using an Image as a Prompt

An IP-Adapter (image prompt adapter) model can be plugged into diffusion models (like a ControlNet model) to enable image prompting. The adapter can be used with other models finetuned from the same base model, so you need a separate version for SD, SDXL, or Flux. IP-Adapter uses decoupled cross-attention: it adds a separate cross-attention layer just for image features instead of using the same cross-attention layer for both text and image features.
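The decoupled cross-attention idea can be illustrated with a toy example. This is a simplified sketch in plain Python for a single query vector. It leaves out the learned projection matrices and multiple heads, and it does not reflect how the XLabs adapter is wired into Flux. All function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    return [sum(w * value[i] for w, value in zip(weights, values)) for i in range(width)]


def decoupled_cross_attention(query, text_keys, text_values,
                              image_keys, image_values, image_scale):
    """Attend to text and image features separately, then add the results."""
    text_out = attend(query, text_keys, text_values)
    image_out = attend(query, image_keys, image_values)
    # image_scale plays the role of the adapter strength.
    return [t + image_scale * i for t, i in zip(text_out, image_out)]
```

With image_scale set to 0, the result is the plain text attention output, which is why a lower strength lets the text prompt dominate.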

Advantages of IP adapter: Creates interesting style mixes

Disadvantages: Slower generations (plus IPA requires more steps for the effect), style transfer in Flux is hit and miss

The model is available for download here: XLabs IP Adapter. Place the model in your \models\ControlNet folder for ComfyUI.

Advanced IP Adapter Node (Comfy) to Enforce Style

Note that IPA needs around 50 steps to take effect (25 with Flux Turbo). Begin with a lower strength to allow the main prompt to affect the result. Otherwise the guiding image will take over the whole composition, and you would do better with img2img experiments.

ComfyUI

Use the simpler Apply Flux IP Adapter node, or Apply Advanced Flux IP Adapter for more control over generation.

The Advanced IP Adapter node lets you adjust the intensity of the effect during diffusion. You may also try the smoothing options. With begin 0.5 and end 0.9, "First half" raises the strength during the first half of the steps and then stays at 0.9. "Second half" with the same settings starts raising the strength from 0.5 to 0.9 in the second half. "Sigmoid" goes from 0.5 to 0.9 and back (the effect is not so prominent and is similar to "Second half").
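To picture the difference between the two ramp options, here is a small sketch of the schedules as I read them from the behavior described above. It is an illustration, not the node's actual code. The linear ramp, the function name `strength_at`, and the mode names are assumptions, and "Sigmoid" is left out.

```python
def strength_at(progress, begin, end, mode):
    """Return the adapter strength for progress in [0, 1] through the sampling steps."""
    if mode == "first_half":
        # Ramp up during the first half, then hold at `end`.
        ramp = min(progress / 0.5, 1.0)
    elif mode == "second_half":
        # Hold at `begin` during the first half, then ramp up.
        ramp = max((progress - 0.5) / 0.5, 0.0)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return begin + (end - begin) * ramp


# Print both schedules at a few points through the generation.
for mode in ("first_half", "second_half"):
    values = [round(strength_at(p / 4, 0.5, 0.9, mode), 2) for p in range(5)]
    print(mode, values)
```

"First half" pushes the style in early, while the composition is still forming. "Second half" leaves the early steps closer to the text prompt.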

Forge (Not Yet)

NOTE: NOT YET FUNCTIONAL in txt2img at the time of writing this article.

Flux: ControlNet IP Adapter has no effect in Forge in txt2img or img2img, since the new CN code is not ready yet.

Getting "mat1 and mat2 shapes cannot be multiplied (1x1280 and 768x16384)" Error

- Download the CLIP vision model.safetensors from https://huggingface.co/openai/clip-vit-large-patch14/tree/main to your clip vision folder and rename it to:

clip-vit-large-patch14
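The numbers in the error message hint at the cause: a 1280-wide image embedding is being fed into a layer that expects 768-wide input, which is the embedding size of clip-vit-large-patch14. The sketch below only reproduces the shape rule for matrix multiplication. The helper `matmul_shape` is hypothetical and is not part of ComfyUI or PyTorch.

```python
def matmul_shape(left, right):
    """Return the shape of a 2D matrix product, or raise if the inner sizes differ."""
    if left[1] != right[0]:
        raise ValueError(
            "mat1 and mat2 shapes cannot be multiplied "
            f"({left[0]}x{left[1]} and {right[0]}x{right[1]})"
        )
    return (left[0], right[1])


# An embedding of the expected width passes through the 768x16384 layer.
print(matmul_shape((1, 768), (768, 16384)))

# A 1280-wide embedding from a different vision model fails.
try:
    matmul_shape((1, 1280), (768, 16384))
except ValueError as error:
    print(error)
```

If the error persists after the download, check that the IP Adapter loader really points at the renamed file.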

IPAdapter for Flux uses a rather outdated CLIP vision model (see above). This may be the reason for the uneven results when using this IPA. You may try to bypass the IPAdapter solution and simply use a better vision model to create a style prompt from your input image. IPAdapter for Flux still often creates interesting images, so it may be worth experimenting with.

If you are going for a more exact IPA style transfer, you may consider using SDXL or SDXL-guided Flux workflows.

"If you want a specific style which holds in many scenarios, use (or make) a good LoRA model."

Conclusion

In this article, we have explored the capabilities of using styles in Flux and provided several workflows for experimentation. Clever prompt engineering is particularly beneficial with the Flux model, as it can reduce the number of LoRAs (Low-Rank Adaptation models) required, thereby conserving computational resources and avoiding the potential negative effects of using too many LoRAs in a single workflow.

Using IP Adapter for styles is not very effective in a Flux workflow (considering the many sampling steps needed to produce a result), although it can generate interesting outputs. I suspect it can detect only a very limited range of styles. This may change when a better IPA (or a compatible CLIP vision model) becomes available.

Creating custom prompts or combining styles can require effort, but it is a rewarding approach for experimenting with any generative AI model. With Flux, it can also become a very challenging task.

Resources and References

- Example workflows with style prompts for Flux (sandner.art github)

- SDXL/Flux Styles preview (extensive list with examples): Flux Style Test Gallery

- XLabs IP Adapter https://huggingface.co/XLabs-AI/flux-ip-adapter/tree/main

- CLIP Vision for XLabs IP Adapter https://huggingface.co/openai/clip-vit-large-patch14/tree/main

- IP Adapter pipeline explanation https://huggingface.co/docs/diffusers/main/using-diffusers/ip_adapter

- My experimental Forge styles.csv file with Milehigh Styler styles included for compatibility test