Qwen Image and Edit: Local GGUF Generations with Lightning

Qwen image is Multimodal Diffusion Transformer (MMDiT) open-sourced model with very interesting capabilities for both image generation and powerful image editing, including a better font handling and understanding composition instructions. Unlike cloud solutions that lock you into API pricing and usage limits, or ControlNet pipelines that require multiple models and preprocessing steps, Qwen-Image-Edit-2509 delivers end-to-end editing in a single model that can run entirely on local hardware. I was testing it on NVIDIA RTX A4000 (16GB) and the workflows I am providing are using 4-bit quantization versions of Qwen unet models and encoders (workflows, notes and test prompts are on github repo). Full model (without quantization) recommends 24GB+ VRAM (e.g., RTX 4090) and 64GB system RAM for optimal performance.

This article is a guide to installing and using both Qwen-Image (for generation) and Qwen-Image-Edit-2509 (for advanced editing) on your local GPU using GGUF quantization for optimal VRAM efficiency. You may use just Qwen-Image-Edit-2509 also for the image generation, but I suggest you test both versions (and also diverse quantizations for best results). You should be able to run the models more effectively on GPUs with 20-24+GB of VRAM, however you can run the models under 16GB with some performance penalty).

Intro

As of October 2025, leading commercial AI generative models dominate the cloud-based image editing market with advanced, user-friendly UI tools. Google Gemini with Nano Banana (Gemini 2.5 Flash Image) excels in text-driven edits, offering features like character consistency, object replacement, background swapping, style transfer, attempts on rotating objects in scene (3D scene conversion). Microsoft Copilot, powered by DALL-E 3, also delivers multimodal editing for creative workflows. Adobe Firefly continues to lead with professional-grade editing, leveraging its integration with Creative Cloud for complex design tasks. These platforms set the standard for commercial applications and novel techniques implementations, aiming its workflows at design tasks. Meanwhile, open-source alternatives have struggled to match their precision and ease of use.

We've seen Stable Diffusion's InstructPix2Pix, Semantic Guidance methods, and Cosine-Continuous Stable Diffusion XL attempts to solve this, and countless ControlNet implementations that require complex preprocessing pipelines. Image-to-image workflows demand careful parameter tuning, and achieving consistent edits while preserving original image characteristics remains a persistent challenge with or without using masking techniques.

Qwen-Image and Qwen-Image-Edit (2509): Model Overview & Capabilities

Enter Qwen-Image-Edit. Released by Alibaba's Qwen team, these models represent a shift in open-source image generation and editing. Qwen-Image-Edit-2509 particularly stands out by incorporating functionality that traditionally required separate ControlNet models, while adding multi-image input capabilities that enable multimodal context.

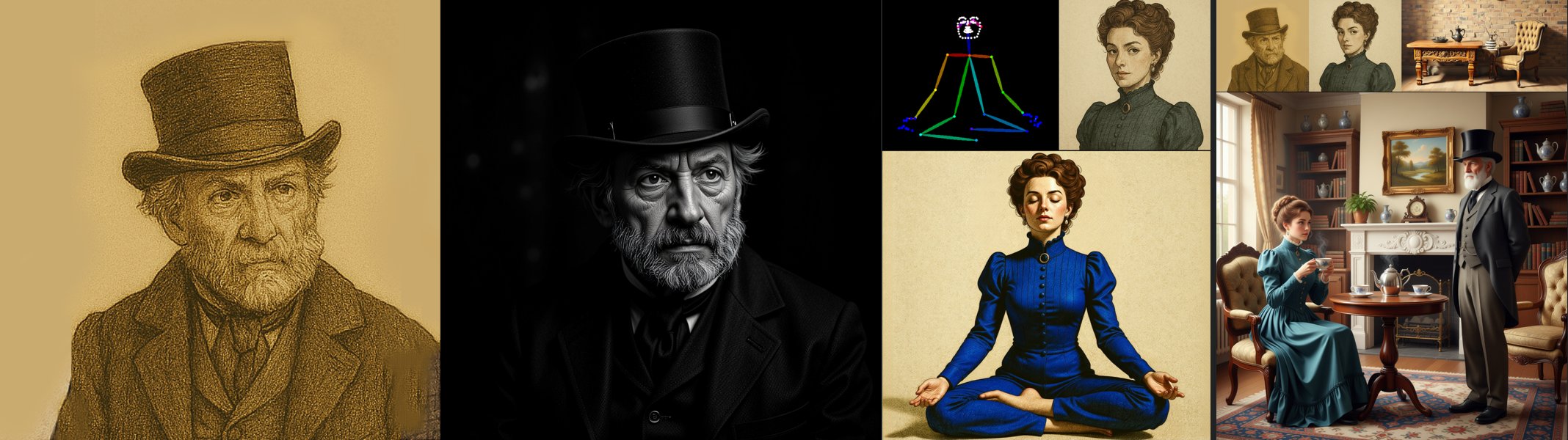

TIP: You may add Openpose image as one of input images, Qwen-Image-Edit (2509) will detect the pose (pose can be created from an image or in online pose editor for SD).

Technical Architecture: Why Qwen-Image Is Different

Qwen-Image represents a departure from traditional diffusion models. At its core is a 20-billion parameter Multimodal Diffusion Transformer (MMDiT) architecture that treats text and image information as equal contributors in the generation process, rather than the text-as-conditioning approach used by Stable Diffusion.

The diffusion transformer uses flow matching with Ordinary Differential Equations (ODEs) rather than traditional denoising diffusion. Text features are treated as 2D tensors and concatenated diagonally with image latents, enabling true multimodal interaction at every layer.

Multimodal Scalable Rotary Positional Encoding (MSRoPE) allows the model to understand spatial relationships between text and visual elements, allowing quite precise text placement and multi-object compositions. Qwen-Image-Edit uses parallel encoding pathways incorporating Semantic Path (Qwen2.5-VL) and Appearance Path (VAE Encoder): These representations are fused within the MMDiT through cross-attention, allowing the model to simultaneously understand "this is a person wearing a red shirt" (semantic) and "this specific shade of red with this exact fabric texture" (appearance). The 2509 update introduced 1-3 image input support, enabling workflows that previously required multiple ControlNet models.

ComfyUI Portable Installation

We will use ComfyUI portable for this example:

- From https://github.com/comfyanonymous/ComfyUI, download the portable version https://github.com/comfyanonymous/ComfyUI?tab=readme-ov-file#installing

- unzip the file into a folder

- IMPORTANT: We will need also Manager extension for portable ComfyUI from https://github.com/Comfy-Org/ComfyUI-Manager. Use "Installation[method2] (Installation for portable ComfyUI version: ComfyUI-Manager only)" option. This means, you will download install-manager-for-portable-version.bat into your main ComfyUI directory and run it to install the manager.

- GGUF support extension (this you may install when importing my workflow, or install it via Manager: ComfyUI-GGUF (GGUF Quantization support for native ComfyUI models).

Installation for Use with ComfyUI

Models

Depending on your intended use, you will need these files placed in proper folders. :

- GGUF Models (going to "\ComfyUI_windows_portable\ComfyUI\models\unet"): https://huggingface.co/city96/Qwen-Image-gguf, https://huggingface.co/QuantStack/Qwen-Image-Edit-2509-GGUF, https://huggingface.co/QuantStack/Qwen-Image-Edit-GGUF (older version)

- Encoders (to "\ComfyUI_windows_portable\ComfyUI\models\text_encoders") https://huggingface.co/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/tree/main. I recommend to also test https://huggingface.co/QuantFactory/Qwen2.5-7B-Instruct-abliterated-v2-GGUF as it seems to adhere to prompt details better in gguf version.

- Vae (going to "ComfyUI_windows_portable\ComfyUI\models\vae") https://huggingface.co/QuantStack/Qwen-Image-Edit-GGUF/tree/main/VAE

- Lightning Lora (going to "\ComfyUI_windows_portable\ComfyUI\models\loras") https://huggingface.co/lightx2v/Qwen-Image-Lightning/tree/main

Workflows

I have prepared basic workflows for testing various scenarios. These are using GGUF versions of models, and you will find them here:

What Quantization to Use?

4-bit Q4_K_M and Q4_K_S seem to provide the best quality/performance ratio on lower VRAM (16GB).

Workflows Tips

You may use Qwen-Image-Edit-2509 model in Qwen Image workflows to generate an image without editing or multi-image inputs.

When using Lightning with GGUF models, experiment with steps, depending on a subject. Try the double of steps (7 or more steps for 4-step lora) to get more details, but high number of steps (like 50) do not contribute to details and produce artifacts.

Expect the outputs often default to Asian contexts, (especially when rendering older age groups or scenes from working environments) which is no surprise or an issue and can be remedied by LoRAs or stronger prompting if needed.

Inference Speeds

This table is for orientation only, the speed here is affected by your GPU and VRAM available (GGUF models used and image resolution). Note that generally the less steps means less details. Inference Speed for ~1 megapixel image (NVIDIA RTX 4000, 16GB):

50 steps: ~7 minutes

8 steps (Lightning): ~2 minutes

4 steps (Lightning): ~1 minutes

Experiment with various v1.0 and v2.0 Lightning Loras versions and steps to get ideal details and prompt adherence.

Errors

Error: TextEncodeQwenImageEdit mat1 and mat2 shapes cannot be multiplied (5476x1280 and 3840x1280)

This GGUF error may appear if you do not have a mmproj file (GGUF needs another mmproj model file in the same folder). Solution:

- 1. download the mmproj file:(https://huggingface.co/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/blob/main/mmproj-F16.gguf)

- 2. rename it to "Qwen2.5-VL-7B-Instruct-mmproj-F16" (or "Qwen2.5-VL-7B-Instruct-mmproj-BF16" respectively) and put it in your encoder folder (the same folder you have the clip model you want to use)

- 3. update and restart. then you select your clip file in the node (not the mmproj) and the workflow should execute.

Detailed Info about Models

Qwen-Image

Released: August 4, 2025

Architecture: 20B parameter Multimodal Diffusion Transformer (MMDiT)

License: Apache 2.0 (fully open-source)

Key Capabilities:

- Superior Text Rendering: Excels at complex text rendering including multi-line layouts, paragraph-level semantics, and fine-grained details

- Multilingual Support: Exceptional performance in both alphabetic (English) and logographic (Chinese, Japanese, Korean, Italian) languages

- Diverse Artistic Styles: From photorealistic to impressionist paintings, anime aesthetics to minimalist design

- Seven Aspect Ratios: Supports 1:1, 16:9, 9:16, 4:3, 3:4, 3:2, 2:3

- 32,000 Token Prompt Window: Inherited from Qwen 2.5-VL backbone (vs. ~75 tokens in Stable Diffusion CLIP). However, in some sources there is recommended length of prompt 200 tokens (this discrepancy needs more research).

- Resolution Support: 256p to 1328p with dynamic resolution processing, trained aspect ratios from documentation: "16:9": (1664, 928), "9:16": (928, 1664), "4:3": (1472, 1104), "3:4": (1104, 1472), "3:2": (1584, 1056), "2:3": (1056, 1584)

Learn more details in references at the end of this article.

Qwen-Image-Edit

Released: August 18, 2025

Updated: September 22, 2025 (Qwen-Image-Edit-2509)

Key Capabilities:

- Precise Text Editing: Bilingual (Chinese/English) text editing with preservation of original font, size, and style

- Dual Semantic and Appearance Editing:

- Low-level appearance editing (add/remove/modify elements while keeping other regions unchanged)

- High-level semantic editing (IP creation, object rotation, style transfer with semantic consistency)

- Multi-Image Editing (2509 version): Supports 1-3 input images for person+person, person+product, person+scene combinations

- Enhanced Consistency: Improved facial identity preservation, product consistency, and text formatting

- Novel View Synthesis: 90° and 180° object rotation capabilities

- ControlNet Support: Depth maps, edge maps, keypoint maps, and more

Conclusion

Qwen-Image and Qwen-Image-Edit-2509 represent an advanced AI image generation system with great results, which not only match but exceed proprietary solutions in specific capabilities—particularly text rendering (vs. Adobe Firefly Image 4) and multimodal editing (compares great vs. Nanobana and others) . It runs locally on consumer hardware (8GB+ VRAM with GGUF; I suggest 16GB+). A wide token context enables great prompt detail and composition adherence. The outputs may seem a little bit sterile or plastic from the get-go, which can be remedied through workflows (e.g., staged edits) or specific prompt engineering and LoRAs.

Qwen-Image-Edit-2509's multi-image capabilities and natural language instructions fundamentally change the editing workflow, shifting toward semantic understanding over pixel-level constraints. You may leverage its image generation and editing via a single model in more effective and complex staged workflows which minimizes context loss compared to multi-model pipelines (the model supports several input images for editing, allowing semantic fusion (e.g., combining elements from multiple sources via natural language prompts like "replace the background with elements from image 2").

Is it better than Flux? The short answer: Yes. However, if you're doing occasional one-off edits, the Flux API might be easier or faster. For more complex tasks, the architecture of Qwen-Image-Edit-2509 has more potential and it is superior. Will it be successful? Possibly, but there are some caveats: It depends on the application and on whether it will attract LoRA trainers and community interest to improve the realism of renders (especially diversity and LoRA selection). For graphic design, it is a great tool and a very promising direction, especially considering its open-source ecosystem.

References

- Qwen-Image Technical Report https://arxiv.org/abs/2508.02324

- Qwen team research https://qwen.ai/research

- Qwen team https://github.com/QwenLM

- Connected papers

- GGUF support extension for ComfyUI https://github.com/city96/ComfyUI-GGUF

- https://github.com/ModelTC/Qwen-Image-Lightning

- My workflows and notes on github https://github.com/sandner-art/ai-research/tree/main/QWEN-Image-Edit