Upscaling Images With Neural Network Models on a Local Machine

Machine Learning Models

Upscaling models are neural networks trained on large image datasets. You can download pre-trained models (or train one yourself) and experiment on a local machine. Different models suit different situations: some are great for general photos or faces, others for drawings, landscapes, digital art, or camera noise removal. The technology is also capable of image restoration and adjustments. Expect processing to take a while, depending on the model, the image size, and your hardware.

First, download and install chaiNNer from the GitHub releases page: https://github.com/chaiNNer-org/chaiNNer/releases

Choose an installation release for your OS or the portable version.

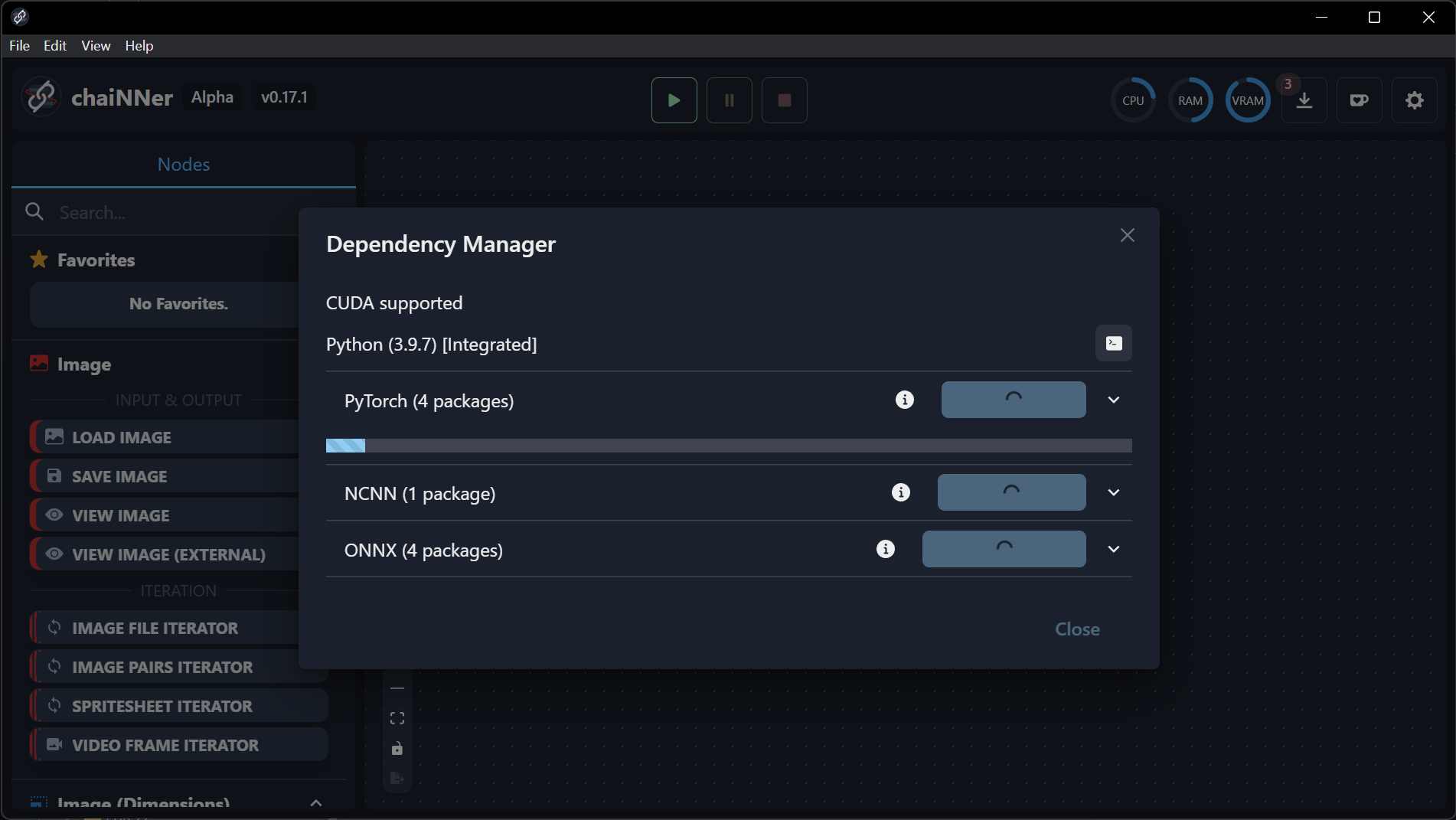

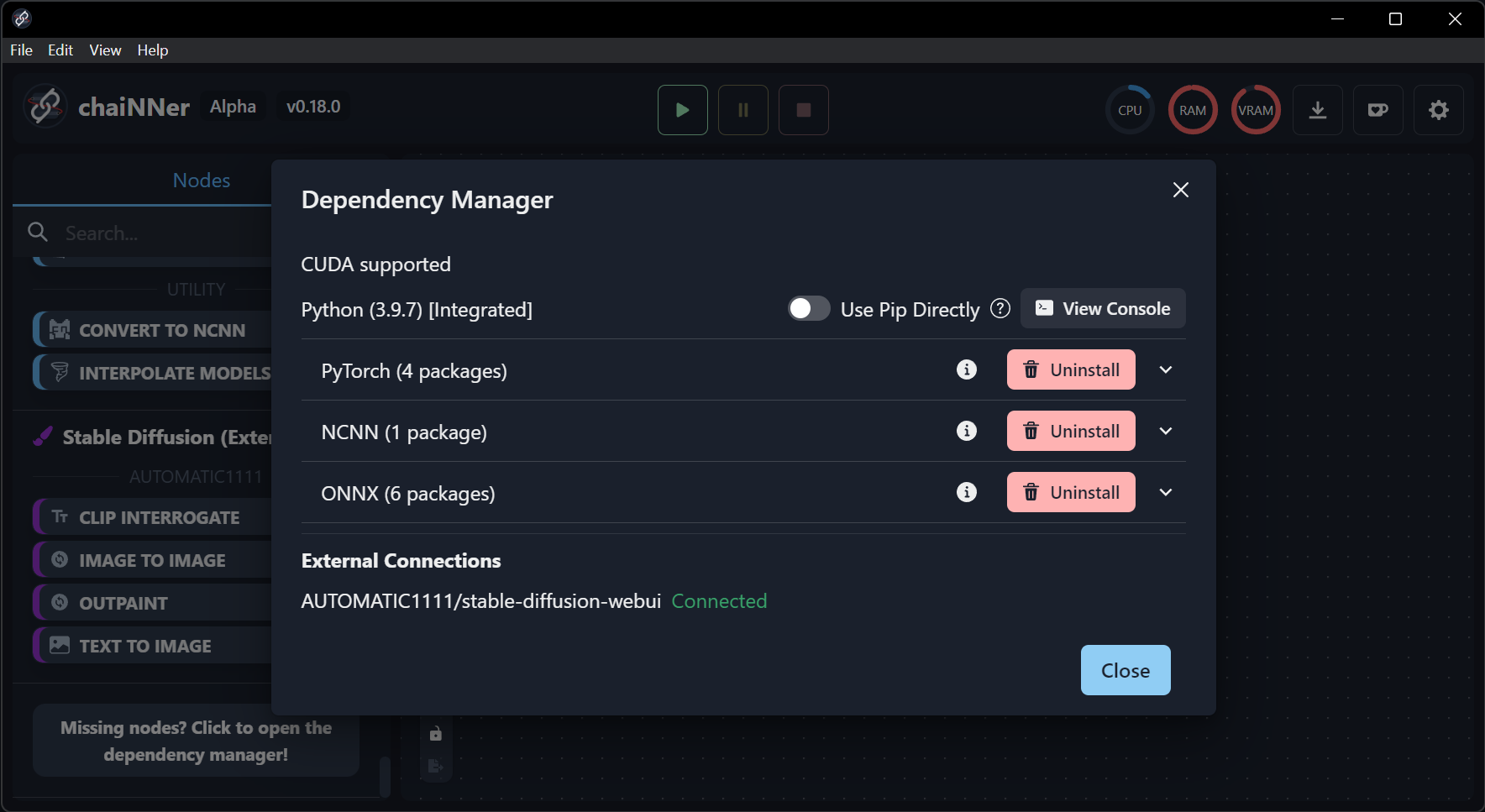

After starting the program, open Manage Dependencies from the icon in the top right (it usually shows a small number indicating how many dependencies are available to download) and install the dependencies needed for running AI models. Installing them will take some time. PyTorch is an open source machine learning framework, NCNN is a neural network inference framework, and ONNX (Open Neural Network Exchange) is an open format for machine learning models.

When reinstalling or updating to a new version, check and update those dependencies too!

After installing the dependencies from the menu, restart chaiNNer. Now you are ready to work with AI-trained models!
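If you are curious whether these frameworks are also visible to a regular Python installation, the sketch below checks for them. The import names (`torch`, `ncnn`, `onnx`) are assumptions about how the packages are exposed, and the result only describes the interpreter that runs the script; chaiNNer may manage its own separate Python environment, so Manage Dependencies remains the place to check what chaiNNer itself has installed.

```python
import importlib.util

# Assumed import names: PyTorch as "torch", the NCNN Python bindings as "ncnn",
# and the ONNX package as "onnx".
BACKENDS = {"PyTorch": "torch", "NCNN": "ncnn", "ONNX": "onnx"}


def installed_backends(backends=BACKENDS):
    """Return {label: True/False} depending on whether the module can be found."""
    return {
        label: importlib.util.find_spec(module) is not None
        for label, module in backends.items()
    }


if __name__ == "__main__":
    for label, found in installed_backends().items():
        print(f"{label}: {'installed' if found else 'missing'}")
```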

Models for Any Occasion

Download some models for testing from the database. Various models are trained for different applications.

Get .pth models from the Upscale Wiki (now OpenModelDB).

SwinIR: https://github.com/JingyunLiang/SwinIR (pretrained model releases: https://github.com/JingyunLiang/SwinIR/releases/tag/v0.0)

Official research models: https://upscale.wiki/wiki/Official_Research_Models

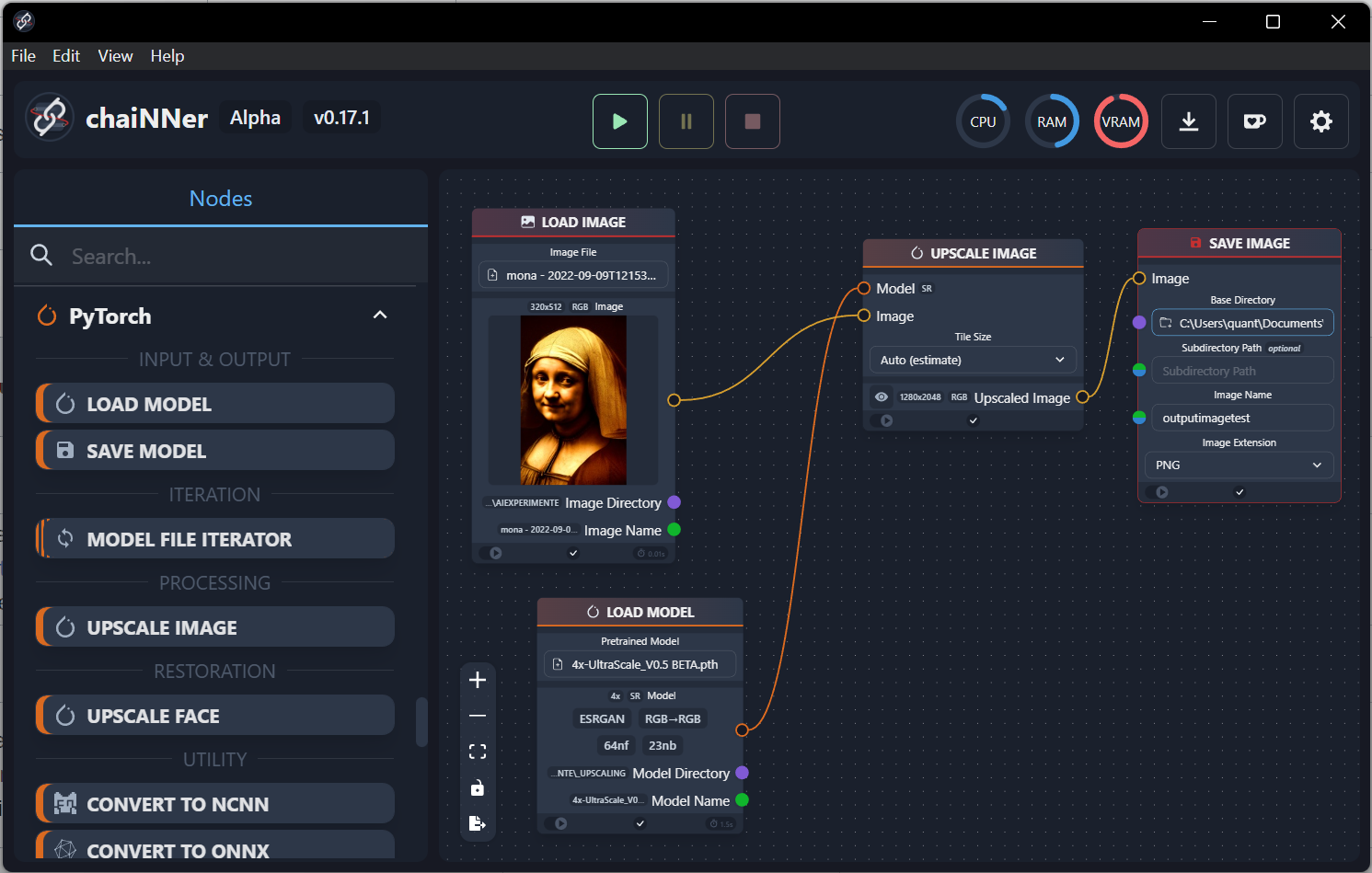

You may start testing with models like 4x-UltraScale9_V0.5 BETA.pth, 4x-UltraSharp.pth, 4xESRGAN.pth, Lady0101_208000.pth or 8xHugePaint_v1.pth.
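Many of these file names begin with the scale factor (4x, 8x), which is handy when sorting a growing model folder. The helper below is a small sketch that reads that prefix; the naming pattern is only a convention assumed from the examples above, `scale_from_filename` is a hypothetical helper, and the real scale is determined by the model itself, not by its name.

```python
import re

# Assumption: the file name starts with "<digits>x", as in "4x-UltraSharp.pth".
SCALE_PREFIX = re.compile(r"^(\d+)x", re.IGNORECASE)


def scale_from_filename(filename):
    """Return the scale factor hinted at by the file name, or None if absent."""
    match = SCALE_PREFIX.match(filename)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    for name in ["4x-UltraSharp.pth", "8xHugePaint_v1.pth", "Lady0101_208000.pth"]:
        print(name, "->", scale_from_filename(name))
```

Names without such a prefix, like Lady0101_208000.pth, return None, so check the model's description for its scale.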

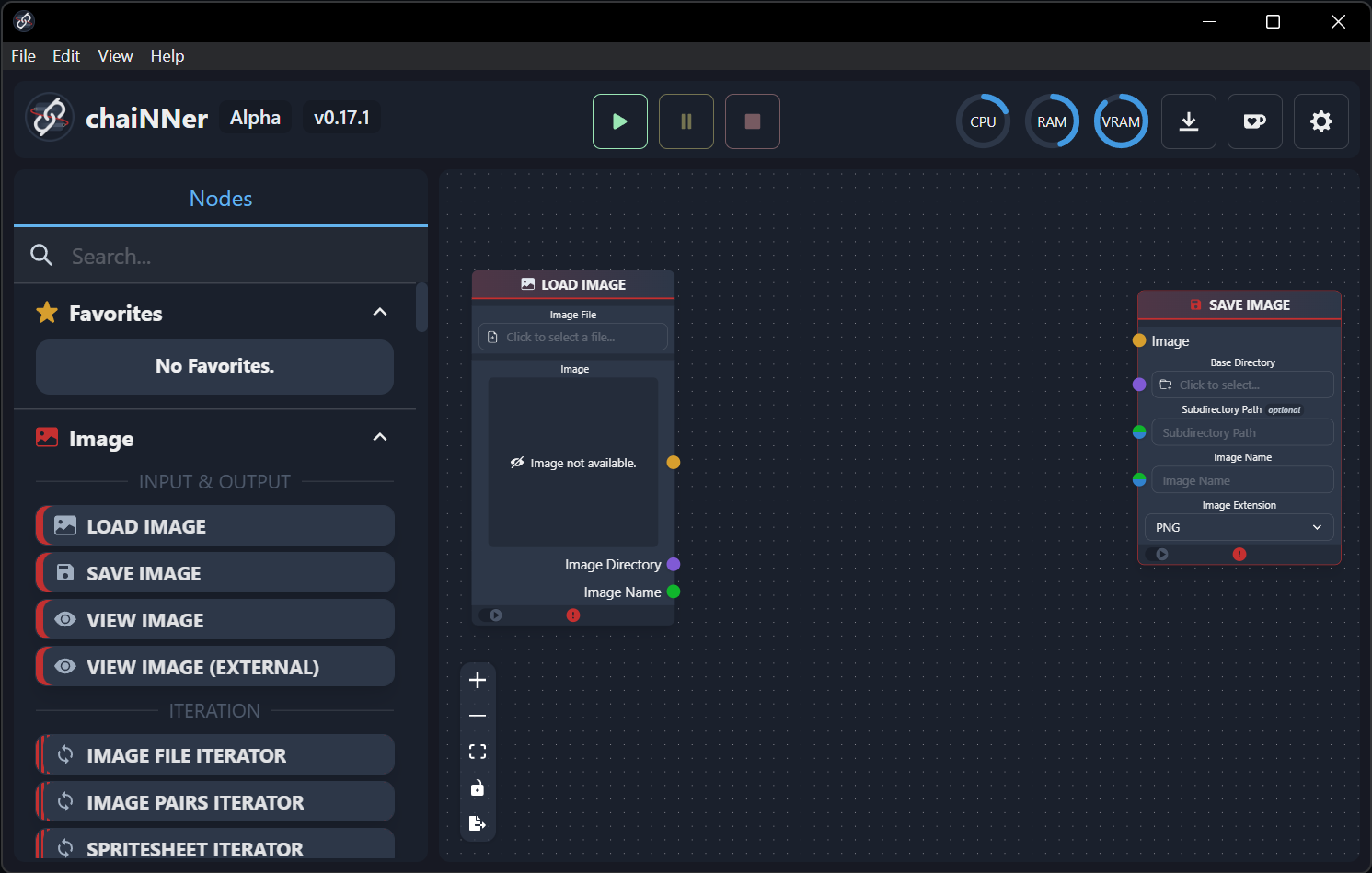

Simple Demonstration of Upscaling a Single File

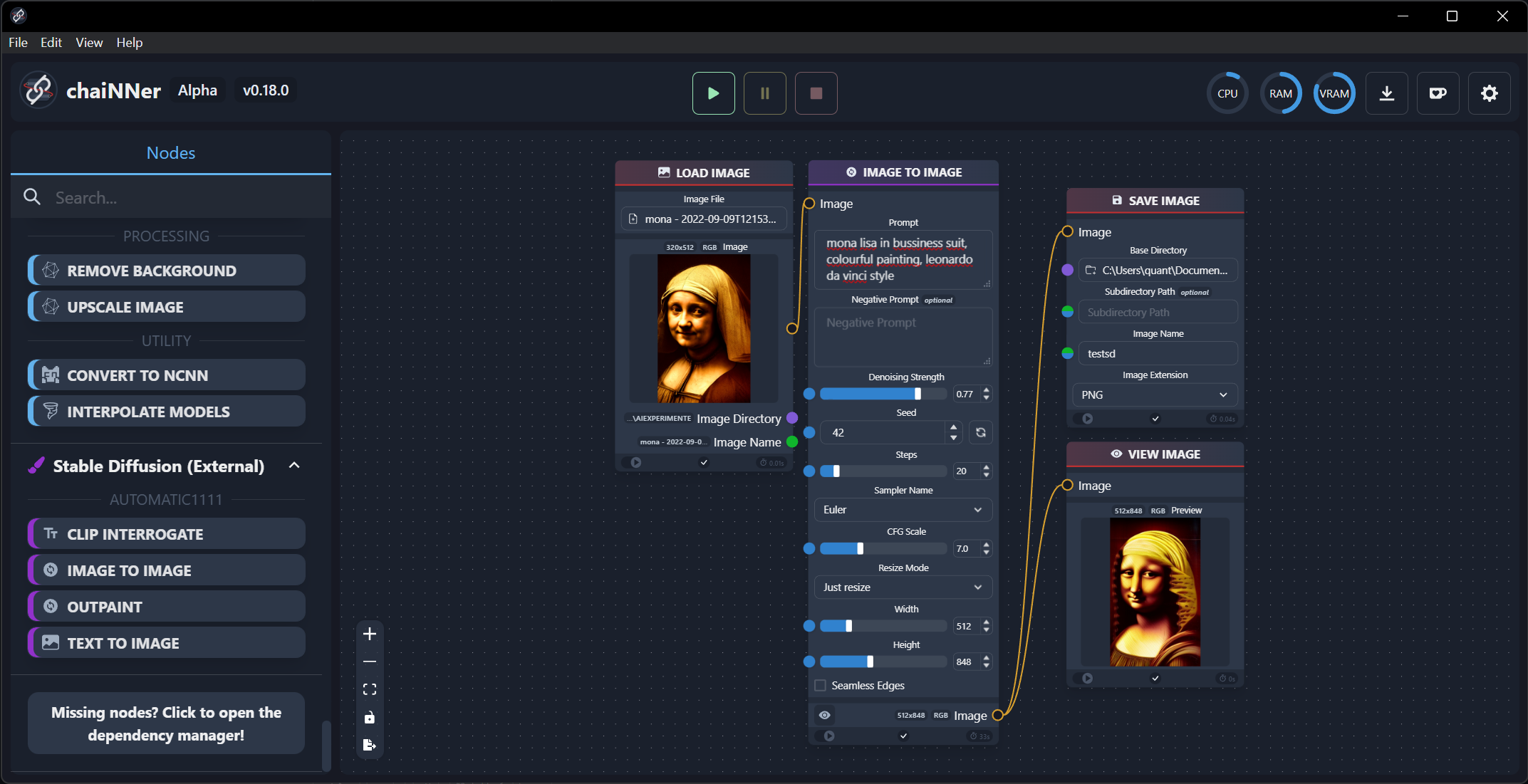

From the side menu, under the Image stack submenu, choose the Load Image and Save Image nodes (you may double-click them or drag them onto the workspace). The program is node-based, and you manage the processing by connecting the nodes.

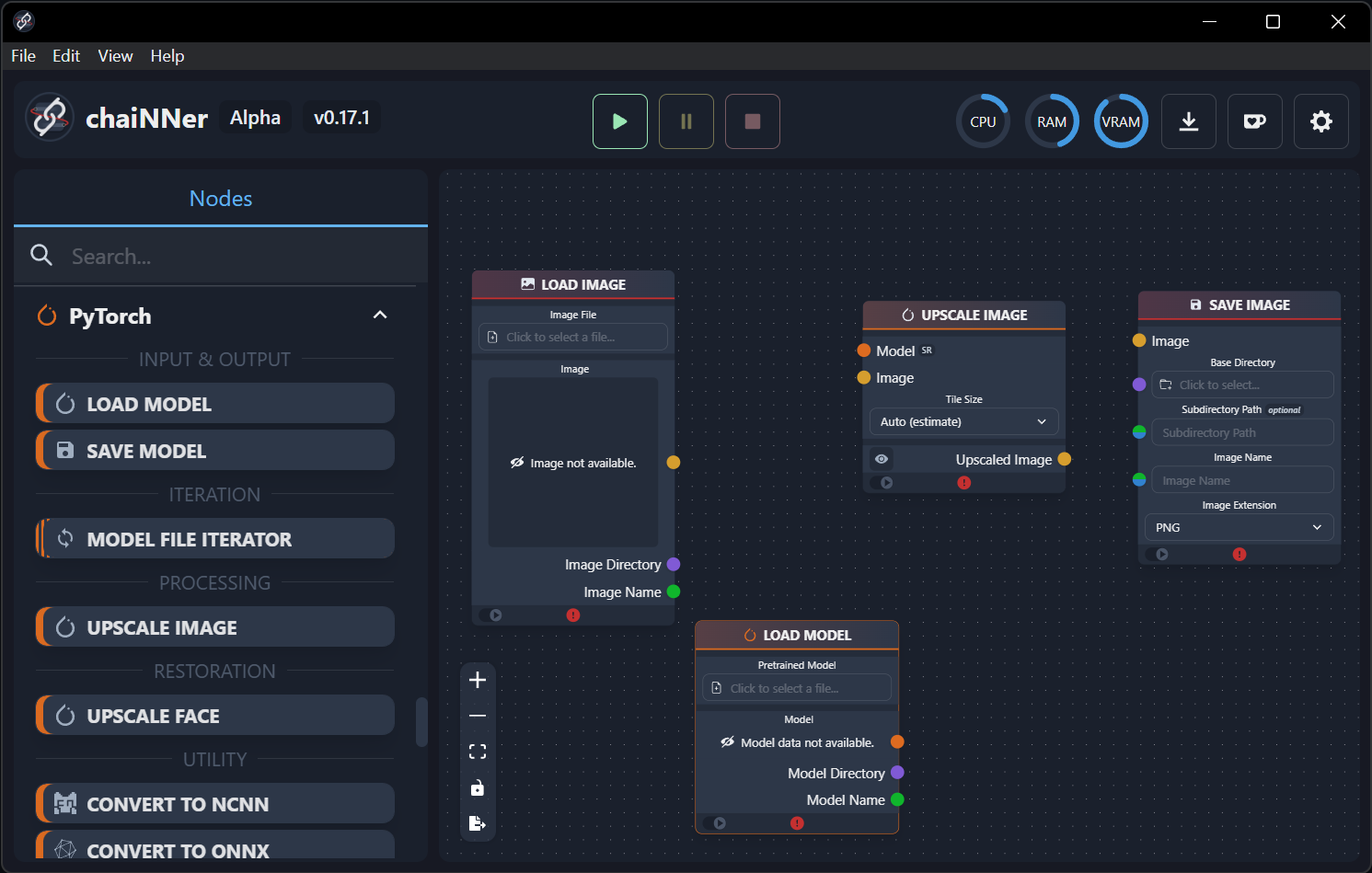

Scroll to the PyTorch stack, choose the Load Model and Upscale Image nodes, and insert them in the same way.

Connect the inputs and outputs of all nodes.

- Import the source image by clicking Image File inside the Load Image node.

- Load the downloaded model (.pth) inside the Load Model node.

- Set the output directory and the Image Name in the Save Image node.

- To upscale your image, press the green play button at the top.

The result depends on the specific model. Start your experiments with universal models, then proceed to specific applications.
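To make the node idea more concrete, here is a minimal sketch of how such a chain can be evaluated: each node runs only after the nodes connected to its inputs have finished. This is not chaiNNer's actual code. The four functions are hypothetical stand-ins that pass strings around instead of real image data, and the file names are made up. It needs Python 3.9 or newer for `graphlib`.

```python
from graphlib import TopologicalSorter


# Hypothetical stand-ins for the four nodes used in this demonstration.
def load_image(path):
    return f"image({path})"


def load_model(path):
    return f"model({path})"


def upscale_image(model, image):
    return f"upscaled({image} with {model})"


def save_image(image, name):
    return f"saved {image} as {name}"


# Each node: (function, names of connected input nodes, settings typed into the node).
NODES = {
    "Load Image": (load_image, [], {"path": "photo.png"}),
    "Load Model": (load_model, [], {"path": "4x-UltraSharp.pth"}),
    "Upscale Image": (upscale_image, ["Load Model", "Load Image"], {}),
    "Save Image": (save_image, ["Upscale Image"], {"name": "photo_4x"}),
}


def run_graph(nodes):
    """Run every node after the nodes it depends on and collect the outputs."""
    dependencies = {name: deps for name, (_func, deps, _params) in nodes.items()}
    results = {}
    for name in TopologicalSorter(dependencies).static_order():
        func, deps, params = nodes[name]
        results[name] = func(*(results[dep] for dep in deps), **params)
    return results


if __name__ == "__main__":
    print(run_graph(NODES)["Save Image"])
```

Pressing the play button corresponds roughly to calling `run_graph`: the connections decide the order, not the position of the nodes on the workspace.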

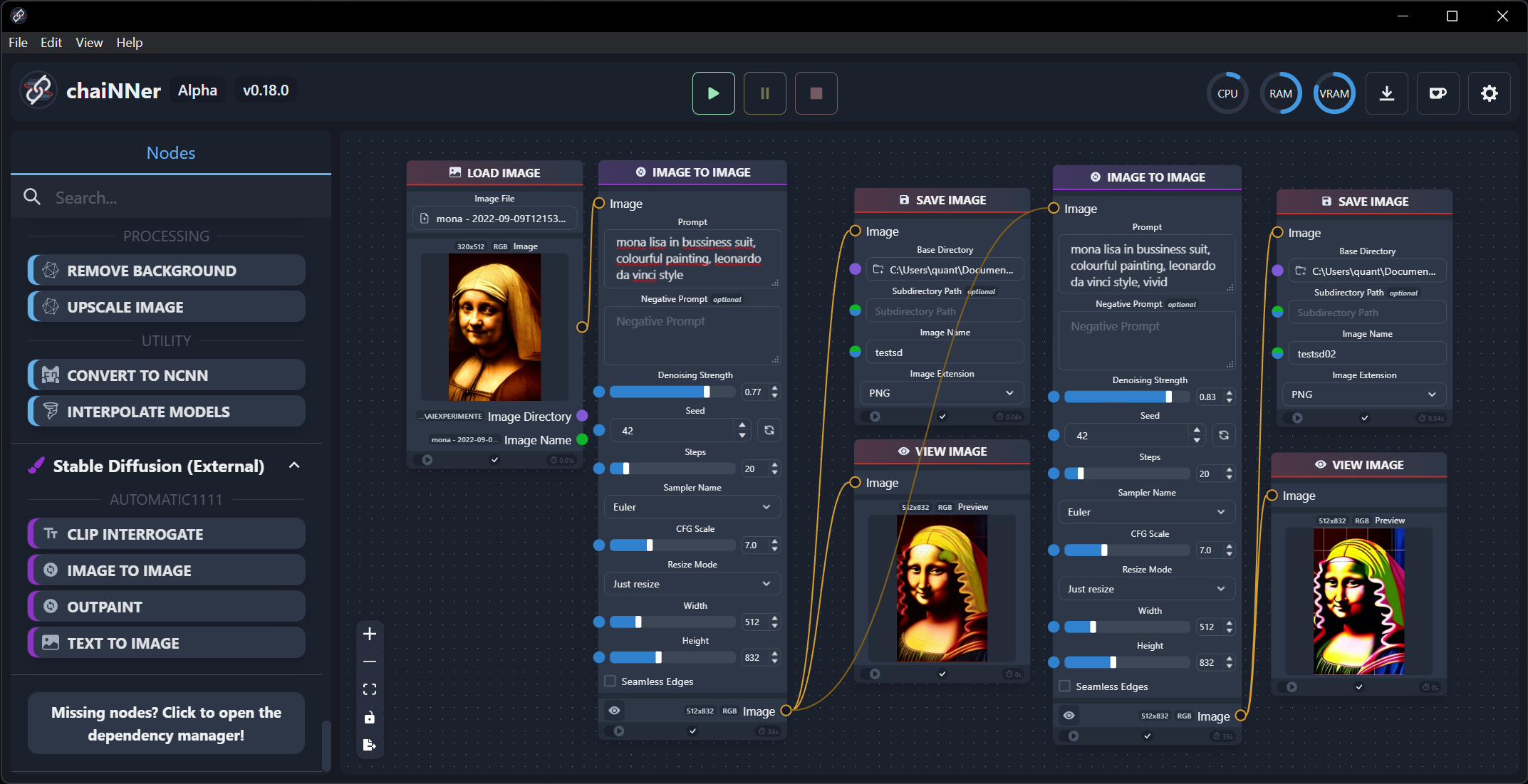

This is the simplest demonstration of the possibilities of chaiNNer. By using nodes and connecting their outputs and inputs, you can program complex batch processing procedures.
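Conceptually, a batch procedure boils down to pairing every source image in a folder with the file it should be saved as. The sketch below only plans those pairs and does not upscale anything; the list of extensions, the `_upscaled` suffix, and the helper name `plan_batch` are assumptions chosen for illustration.

```python
from pathlib import Path

# Assumed set of image formats worth picking up from the input folder.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def plan_batch(input_dir, output_dir, suffix="_upscaled"):
    """Pair every image in input_dir with the PNG path it would be saved to."""
    input_dir, output_dir = Path(input_dir), Path(output_dir)
    jobs = []
    for source in sorted(input_dir.iterdir()):
        if source.is_file() and source.suffix.lower() in IMAGE_SUFFIXES:
            jobs.append((source, output_dir / f"{source.stem}{suffix}.png"))
    return jobs
```

Calling `plan_batch("input", "output")` on an existing folder returns a list of (source, target) paths, skipping anything that is not an image.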

Stable Diffusion in chaiNNer

Since version 0.18, you can use Stable Diffusion (SD) in chaiNNer via the AUTOMATIC1111 web UI API. Install AUTOMATIC1111 (check this article on running Stable Diffusion locally), then run webui.sh --api or webui.bat --api from its directory.

Then start chaiNNer, and a new category appears in the left node menu (check Manage Dependencies; the connection should be displayed as in the image above). Rendering uses the model weights loaded in the AUTOMATIC1111 web UI. If you run into any issues, make sure to start the web UI API first and then restart chaiNNer.
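If the new category does not show up, it helps to confirm that the API is actually answering before restarting chaiNNer. The following sketch asks the web UI for its list of checkpoints. It assumes the web UI runs on its default local address (http://127.0.0.1:7860) and that the `/sdapi/v1/sd-models` route is available when started with `--api`; adjust the URL if your setup differs. `list_sd_models` is a hypothetical helper name.

```python
import json
import urllib.error
import urllib.request

# Assumption: default local address of the AUTOMATIC1111 web UI.
DEFAULT_URL = "http://127.0.0.1:7860"


def list_sd_models(base_url=DEFAULT_URL, timeout=5):
    """Return checkpoint titles from the web UI API, or None if it is unreachable."""
    url = f"{base_url}/sdapi/v1/sd-models"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            models = json.load(response)
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error, or a non-JSON answer.
        return None
    if not isinstance(models, list):
        return None
    return [model.get("title") for model in models if isinstance(model, dict)]


if __name__ == "__main__":
    titles = list_sd_models()
    if titles is None:
        print("API not reachable: start the web UI with --api first.")
    else:
        print("Available checkpoints:", titles)
```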

Conclusion

The advantages of this technique are flexibility and a programmatic approach to image management, upscaling, and enhancement. A powerful graphics card shortens processing times considerably, although neural upscaling remains more demanding than standard image resampling. The node-based design is also easy to control and invites experimentation with images and video frames, often with outstanding results.

Generative Adversarial Networks Notes

GAN (Generative Adversarial Network) is a type of deep learning model that consists of two neural networks: a generator and a discriminator. The generator is trained to produce realistic samples of data (such as images or text) from random noise, while the discriminator is trained to distinguish between the generated samples and real samples from a dataset.
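The tug of war between the two networks can be illustrated with their loss functions. The sketch below uses the common binary cross-entropy formulation with the non-saturating generator loss, which is one standard variant and not necessarily what any particular model was trained with. The discriminator scores are made-up numbers between 0 and 1 (exclusive), standing for the probability that a sample is real.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Low when real samples score near 1 and generated samples near 0."""
    real_term = sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return -(real_term + fake_term)


def generator_loss(fake_scores):
    """Non-saturating variant: low when generated samples score near 1."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)


if __name__ == "__main__":
    # Made-up scores: first the discriminator spots the fakes, then it is fooled.
    spotted = [0.1, 0.2]
    fooled = [0.7, 0.6]
    real = [0.9, 0.8]
    print("discriminator:", discriminator_loss(real, spotted), discriminator_loss(real, fooled))
    print("generator:", generator_loss(spotted), generator_loss(fooled))
```

When the generated samples fool the discriminator, its loss goes up while the generator's loss goes down, which is exactly the opposition that drives training.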

ESRGAN (Enhanced Super-Resolution Generative Adversarial Network) is a specific type of GAN that is designed for image super-resolution and has shown impressive results in this domain. It uses a similar structure to a typical GAN, but with modifications to the generator architecture aimed specifically at producing high-resolution outputs from low-resolution inputs.

Stable Diffusion is a generative technique for image synthesis that starts from random noise and gradually removes it to reveal a detailed image (which can be stylized or photorealistic depending on the model and its training dataset). During training, Gaussian noise is added to images and a neural network learns to predict and remove it; during generation, the noise is reduced step by step to reveal more and more of the underlying structure. The denoising is performed by a U-Net, a type of convolutional neural network (CNN), and is guided by the text prompt. Stable Diffusion runs this process in a compressed latent space instead of directly on pixels, which keeps it practical on consumer hardware. Diffusion models are also generally considered more stable to train than GANs, which can suffer from training instability.
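The noise-adding half of the process is simple enough to sketch in a few lines. The toy example below blends a short list of pixel values with Gaussian noise using the standard closed form from diffusion models, where `alpha_bar` says how much of the original signal survives. It is only an illustration on plain numbers: the real model works on latent tensors, and the learned denoising network that reverses the noise is not shown.

```python
import math
import random


def add_noise(pixels, alpha_bar, rng=random):
    """Blend clean values with Gaussian noise.

    alpha_bar = 1.0 keeps the input intact, alpha_bar = 0.0 leaves only noise.
    """
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * p + noise * rng.gauss(0.0, 1.0) for p in pixels]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same noise
    clean = [0.5, -0.25, 1.0]
    for alpha_bar in (1.0, 0.9, 0.5, 0.0):
        print(alpha_bar, add_noise(clean, alpha_bar, rng))
```

Generation runs the other way: it starts from values like the `alpha_bar = 0.0` row and lets the network remove the noise step by step.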