Cosine-Continuous Stable Diffusion XL (CosXL) on StableSwarmUI

Cosine-Continuous Stable Diffusion XL is a new experimental SD model from Stability AI. The most notable feature of its Cosine-Continuous EDM VPred schedule is its capacity to fix tonality issues with SD models and produce the full color range from 'pitch black' to 'pure white', performing much better than noise offset or LoRA solutions.

You may use the CosXL (Edit) model in conjunction with Flux to achieve cinematic results.

'CosXL 1.0 Edit' also uses a Cosine-Continuous EDM VPred schedule. It takes a source image as input alongside a prompt, and interprets the prompt as an instruction for how to alter the image (instructed image editing with InstructPix2Pix conditioning; you may remember an earlier SD model that used a similar approach).

We will need ComfyUI or StableSwarmUI to use the test workflows. If you are a ComfyUI user, you may jump ahead to downloading the models and workflows.

Setup of StableSwarmUI in Windows

Installing and working with StableSwarmUI is very easy, even if you have never tried it before. You will need around 27 GB of free space (~7 GB for SDXL base + 2 × ~7 GB for the CosXL models + other software downloads).

- Create a folder where you want to install StableSwarmUI

- Download the batch file into the folder and run it. Everything should install automatically.

IMPORTANT: The batch file installs the required software: Microsoft .NET SDK, Git for Windows, and the ComfyUI backend. The StableSwarmUI installer then finishes in the browser by downloading the SDXL base model 1.0. I recommend letting it download this model even if you already have it.
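The free-space estimate above can be checked before running the installer. This is only a minimal sketch using Python's standard library; the 27 GB figure comes from the text, while the function name and the target folder are hypothetical and should be adjusted to your setup.

```python
import shutil

# Rough requirement quoted in this article; not an official figure.
REQUIRED_GB = 27


def has_enough_space(path: str, required_gb: float = REQUIRED_GB) -> bool:
    """Return True if the drive holding `path` has at least `required_gb` GiB free."""
    free_gb = shutil.disk_usage(path).free / 1024**3
    return free_gb >= required_gb


if __name__ == "__main__":
    target = "."  # hypothetical: the folder you created for StableSwarmUI
    print(f"Enough space in {target!r}: {has_enough_space(target)}")
```

If the check fails, free up space or pick another drive before starting the batch file.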

How to Set Custom LoRA or Model Paths

This step is for slightly more advanced users; you can skip it if you just want to test StableSwarmUI with the basic models. In the 'Server' tab, under 'Server Configuration', you can set custom paths. Use a fully formed path (starting with '/' or a Windows drive like 'C:') to specify an absolute path. Example: C:\SDXL\stable-diffusion-webui\models\Lora (if you want to use your LoRAs from another SD UI installation). Alternatively, you can point the main model path to another folder (C:\SDXL\stable-diffusion-webui\models) and then use models from its subfolders as defined in the configuration. Restart the server afterwards.
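The absolute-path rule described above (a leading '/' or a Windows drive such as 'C:') can be illustrated with a small check. This sketch only mirrors the rule as worded in this article; it is not StableSwarmUI's own code, and the function name is hypothetical.

```python
from pathlib import PureWindowsPath


def is_absolute_model_path(value: str) -> bool:
    """True if `value` starts with '/' or with a Windows drive such as 'C:'."""
    # PureWindowsPath parses drive letters on any operating system.
    return value.startswith("/") or PureWindowsPath(value).drive != ""


if __name__ == "__main__":
    for candidate in (r"C:\SDXL\stable-diffusion-webui\models\Lora", "Models/Lora"):
        kind = "absolute" if is_absolute_model_path(candidate) else "relative"
        print(f"{candidate} -> {kind}")
```

Under this rule, a value such as Models/Lora counts as relative rather than absolute.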

Cosine-Continuous Stable Diffusion XL Download

You will find the models at https://huggingface.co/stabilityai/cosxl/tree/main (you will need to agree to the license, or sign up, first).

Download both the 'cosxl.safetensors' and 'cosxl_edit.safetensors' files to "YOUR_PATH\SWARM\StableSwarmUI\Models\Stable-Diffusion". Do not create subfolders; just put them into the models folder. If you have changed the paths to your custom models, put them there instead.
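To confirm that both files landed directly in the models folder (and not in a subfolder), a quick check along these lines may help. It is a sketch only: the file names come from the text above, the helper name is hypothetical, and the folder path is a placeholder you need to replace.

```python
from pathlib import Path

# File names as listed on the model page referenced above.
EXPECTED = ("cosxl.safetensors", "cosxl_edit.safetensors")


def missing_models(models_dir: str, expected=EXPECTED) -> list[str]:
    """Return the expected model files that are not directly inside `models_dir`."""
    folder = Path(models_dir)
    return [name for name in expected if not (folder / name).is_file()]


if __name__ == "__main__":
    # Placeholder path; replace YOUR_PATH with your install location.
    models_dir = r"YOUR_PATH\SWARM\StableSwarmUI\Models\Stable-Diffusion"
    print("Missing:", missing_models(models_dir) or "none")
```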

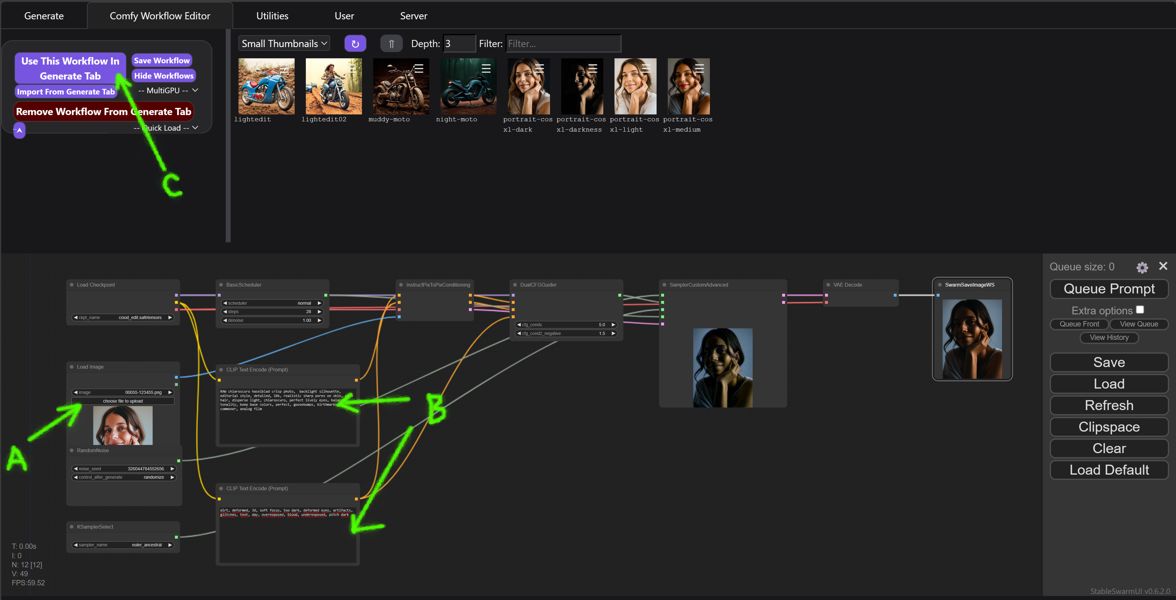

Setting Up the ComfyUI Workflow

Open the 'Comfy Workflow Editor' tab. To insert a workflow, you can either:

- Drag and drop a .json file with a workflow into the window

- Drag and drop an image file with an embedded workflow

Load any image as a starting point for editing (A), then edit the prompts (B) and other settings in the node workflow (CFG and steps are self-explanatory). You may test the workflow with the 'Queue Prompt' button and wait until the rendering finishes. If you are happy with the result, copy your workflow settings with the 'Use This Workflow In Generate Tab' button (C) and switch to the Generate tab.

You may download the test image with the workflow, or my test workflows for art and photography, from the links in References. While StableSwarmUI is running, load the workflow by dragging and dropping the image as described above.

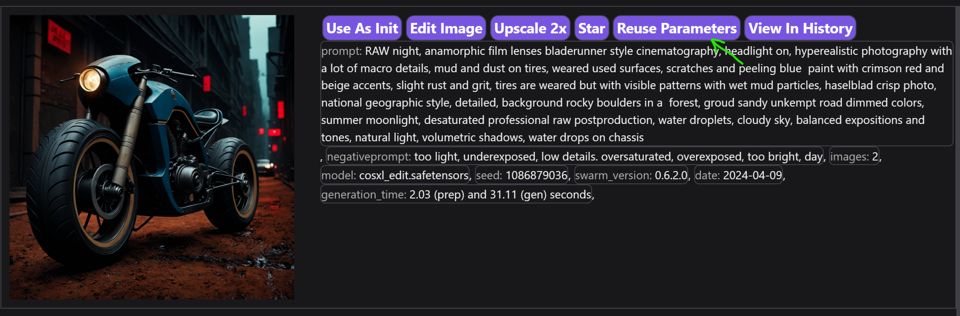

You can also reuse parameters (like a prompt) while keeping the workflow. You will find test files in the References links. An image you generate in StableSwarmUI is saved with its parameters. Drag and drop such an image into the main Generate tab window, click 'Reuse Parameters', and the parameters will load from the image.
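Both the image-with-workflow drag and drop and 'Reuse Parameters' depend on metadata stored inside the image file. The sketch below lists the text chunks of a PNG using only the standard library. It assumes the data sits in plain tEXt chunks; that is an assumption, as are any keyword names you may see (for example `workflow` or `parameters`), and compressed or international text chunks are not handled. The function name and file name are hypothetical.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict[str, str]:
    """Collect tEXt chunks (keyword -> text) from raw PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        elif ctype == b"IEND":
            break
        pos += 12 + length  # skip length, type, data and CRC (CRC is not verified)
    return chunks


if __name__ == "__main__":
    # Hypothetical file name; use an image generated by your own setup.
    with open("generated-image.png", "rb") as fh:
        for key, value in read_png_text_chunks(fh.read()).items():
            print(key, "->", value[:80])
```

If the dictionary comes back empty, the image either carries no embedded data or stores it in a chunk type this sketch does not read.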

Methods I Was Using for This Preview

Cos Stable Diffusion XL 1.0 Edit Model

- For editing an image, similar to img2img with some ControlNets applied (but simpler and faster)

- It also uses the content of the image as an input

Cos Stable Diffusion XL 1.0 as a Regular Checkpoint

- When you use it in the provided workflow, it will use the lead image in a way similar to ControlNet IP Adapter: the image will inspire the output, but unlike with the Edit model, the final image has a different composition.

- Similar prompting to the original SDXL or the SDXL base+refiner workflow

- You may use CosXL just as a checkpoint without a lead image (I edited a simple CosXL-BASEONLY-workflow, which you will find in the References links)

CosXL Edit and Base Tips

- Using a negative prompt seemed to be very important (in contrast with the latest SDXL fine-tuned models)

- Start with general style prompting, then add image-specific changes (such as mud on the tires in the example image above)

- Use a tonally interesting image as a lead file for the base model

- The models struggle with body and face proportions, like most high-resolution models. You can partially control this with a clear lead image or a clever prompt

- You can change the resolution, bypassing the lead image resolution (which makes sense only for the main base cosxl.safetensors checkpoint). There is a simple workflow for this in the links at the end of this article (CosXL-BASE-resolution-workflow).

Because the model takes into account what is already in the image, the result can be very good when altering the scene.

Conclusion

CosXL Edit outputs are truly impressive. The edit model reacts even to inept prompting and outputs very detailed, tonally rich images. It maintains image consistency very well; I would say even better than ControlNet in many cases. It stumbles a little with portraits, but considering the experimental nature of the model, the results are good. The speed is also very reasonable.

Overall, CosXL Edit is an interesting experimental tool that has the potential to find a place in photo or design studios in its future iterations (although the current model's license prohibits commercial use, it allows artistic or scientific research). If you're looking for something to try while waiting for SD 3.0, this is a great option!

StableSwarmUI (a user interface for SD with a ComfyUI backend) is also worth checking out, especially if you're interested in learning ComfyUI workflows, which are great for comparative research in image generation. While StableSwarmUI might not be ideal for daily use (Forge or A1111 might be simpler choices for that), it can be a valuable tool for bulk edits and experiments, and can even spark inspiration for testing new techniques, especially in combination with new models.

References

- Cos Stable Diffusion XL 1.0 and Cos Stable Diffusion XL 1.0 Edit https://huggingface.co/stabilityai/cosxl

- My test workflows and parameter images on GitHub https://github.com/sandner-art/ai-research/tree/main/CosXL-Workflows

- My parameters test images https://github.com/sandner-art/ai-research/tree/main/CosXL-Workflows/CosXL-Parameters-Images

- My experimental Cinematix CosXL merge https://civitai.com/models/396070/cinematix-cosxl-edit . If you do not have a Civitai account, you may use the link https://civitai.com/login?ref_code=AIR-XIP to create an account to get some free generation/training credits for a start.