Face Models Without Training: Instant ID in A1111 ControlNet (ReActor Comparison)

Synthesizing a specific face usually requires some form of fine-tuning. Various techniques achieve face resemblance in the final image, often through extensive model training. Apart from the now-standard training of Low-Rank Adaptation models (LoRAs), usually from several (5-25) source images, some solutions need only one source image. Such solutions have drawbacks when synthesizing unusual face angles, or they use masks that interfere with the diffusion process.

Instant ID is a tuning-free method for transferring facial features, inspired by the IP-Adapter solution for ControlNet.

Installation

Update your ControlNet extension in A1111. There is now an option to select Instant ID, along with its adapter and preprocessor. You will need to download two model files and RENAME them (you can type the required name in the download dialog when saving). Save both files in your A1111 /models/ControlNet directory.

- ControlNet PyTorch model: rename to control_instant_id_sdxl (the .safetensors extension is kept if you change the name while saving)

- ip-adapter.bin: rename to ip-adapter_instant_id_sdxl (the .bin extension is kept if you change the name while saving)

- Restart A1111

The models are quite large, so be prepared to download around 4GB of data.
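If you prefer to script the rename step, the following is a minimal sketch. It assumes the two files were already downloaded to a folder of your choice. The source name `diffusion_pytorch_model.safetensors` is an assumption about what the ControlNet download is called, and `install_models` is a hypothetical helper, not part of A1111.

```python
import shutil
from pathlib import Path

# Downloaded file name -> name expected in models/ControlNet.
# The first source name is an assumption; adjust it to match your download.
RENAMES = {
    "diffusion_pytorch_model.safetensors": "control_instant_id_sdxl.safetensors",
    "ip-adapter.bin": "ip-adapter_instant_id_sdxl.bin",
}


def install_models(download_dir, controlnet_dir):
    """Copy both downloads into the ControlNet folder under their new names."""
    download_dir = Path(download_dir)
    controlnet_dir = Path(controlnet_dir)
    controlnet_dir.mkdir(parents=True, exist_ok=True)
    installed = []
    for src_name, dst_name in RENAMES.items():
        src = download_dir / src_name
        if not src.is_file():
            raise FileNotFoundError(f"expected download not found: {src}")
        dst = controlnet_dir / dst_name
        shutil.copy2(src, dst)  # copy, so the original download stays untouched
        installed.append(dst)
    return installed
```

A call such as `install_models(Path.home() / "Downloads", "stable-diffusion-webui/models/ControlNet")` would place both files; the second path depends on where your A1111 installation lives. Restart A1111 afterwards.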

How to Use Instant ID

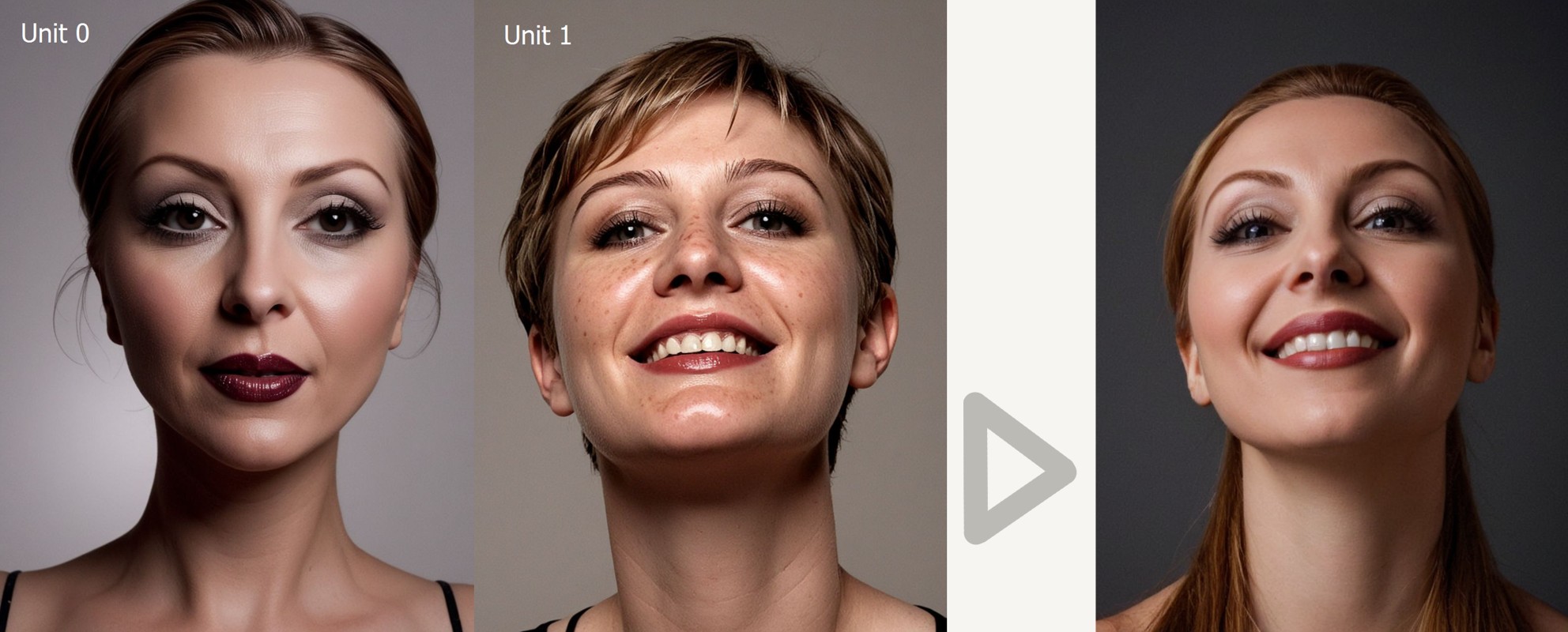

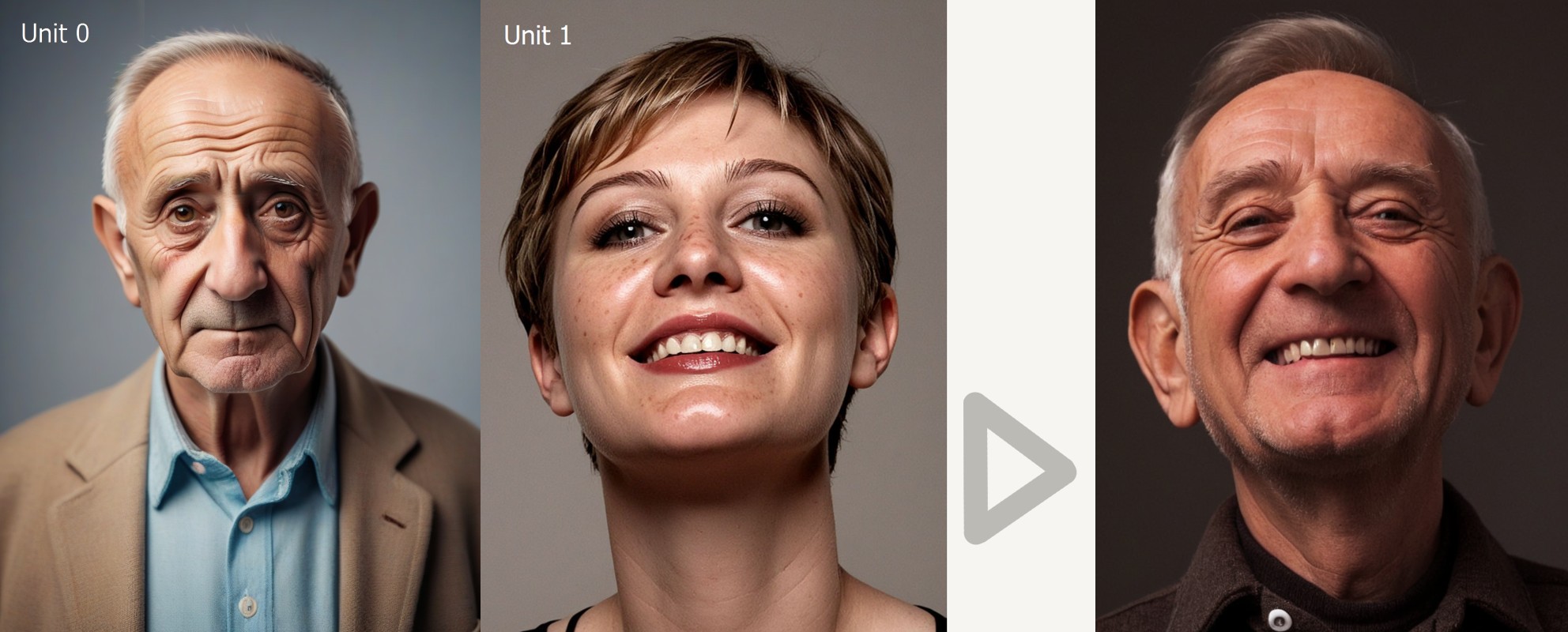

This is slightly more difficult than the usual ControlNet setup, at least for now. You need to set up two ControlNet units as follows:

- ControlNet Unit 0: Preprocessor (instant_id_face_embedding), Model (ip-adapter_instant_id_sdxl)

- ControlNet Unit 1: Preprocessor (instant_id_face_keypoints), Model (control_instant_id_sdxl)

- A mnemonic to keep the pairs straight: embedding/adapter, keypoints/control

The image in Unit 0 is the source of the facial features. The image in ControlNet Unit 1 controls the size of the face and its angle in the output image.
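For readers who drive A1111 through its API instead of the UI, the two units could be described roughly as below. This is a sketch under assumptions: the key names (`enabled`, `module`, `model`, `image`) follow my understanding of the ControlNet extension's API and should be checked against your installed version. The model names may need the hash suffix the UI shows, and `instant_id_units` is a hypothetical helper.

```python
import base64


def encode_image(path):
    """Read an image file and return it as a base64 string."""
    with open(path, "rb") as f:
        return base64.b64encode(f.read()).decode("ascii")


def instant_id_units(face_b64, pose_b64):
    """Build the two ControlNet units: embedding/adapter, then keypoints/control."""
    return [
        {   # Unit 0: source of the facial features
            "enabled": True,
            "module": "instant_id_face_embedding",
            "model": "ip-adapter_instant_id_sdxl",
            "image": face_b64,
        },
        {   # Unit 1: size and angle of the face in the output
            "enabled": True,
            "module": "instant_id_face_keypoints",
            "model": "control_instant_id_sdxl",
            "image": pose_b64,
        },
    ]
```

For example, `instant_id_units(encode_image("face.png"), encode_image("pose.png"))` returns the list in unit order; the file names here are placeholders.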

Important Tips

The transfer sometimes fails, for example when the images are wrongly cropped:

- the reference image should not be tightly cropped; the jaw and neck should be visible

- the second image defines the position and size of the head in the output image

- the source image must show clearly defined volume; do not use flatly lit images

- use sufficient resolution for source images (512-1024)

- use the usual resolutions for your SDXL model to avoid distortions

- at 1024 resolution the model tends to produce fake signatures and artifacts; use a lower or higher resolution if you encounter this with your model
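The two resolution tips can be turned into a quick pre-flight check. The sketch below is only one reading of them: it treats 512 as a minimum for the shorter side of the source image and flags any output with a 1024 px side. Both thresholds are assumptions, and `check_resolutions` is a hypothetical helper.

```python
def check_resolutions(source_size, output_size):
    """Return warnings for (width, height) tuples; an empty list means no issue found."""
    warnings = []
    # Assumed reading of "512-1024": the shorter source side should reach 512 px.
    if min(source_size) < 512:
        warnings.append("source image is smaller than 512 px on its shorter side")
    # Assumed reading of the 1024 tip: flag outputs whose longer side is exactly 1024 px.
    if max(output_size) == 1024:
        warnings.append("1024 px output may show fake signatures; try a lower or higher resolution")
    return warnings
```

Calling `check_resolutions((800, 900), (896, 1152))` returns an empty list, while a 300x400 source with a 1024x1024 output produces both warnings.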

I use an IP-Adapter style image in a third unit (ControlNet Unit 2) to get nice results, but I guess you can get similar results with clever prompting and styles.

I enable Pixel Perfect in ControlNet and set Control Mode to "ControlNet is more important".

For the Cinematix SDXL model, I set CFG as low as 2 and use 12-18 steps. This may differ for other models, but I guess the principle of lower CFG and fewer steps will be the same.
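The same settings can be expressed as a txt2img request body for the A1111 API. This is a hedged sketch: `build_payload` is a hypothetical helper, the ControlNet keys (`pixel_perfect`, `control_mode`, and the `alwayson_scripts` nesting) reflect my understanding of the extension's API, and the default of 15 steps is simply the midpoint of the 12-18 range above.

```python
def build_payload(prompt, width, height, units, cfg_scale=2.0, steps=15):
    """Assemble a txt2img request body with low CFG, few steps and ControlNet units."""
    controlnet_args = [
        {
            **unit,
            "pixel_perfect": True,
            "control_mode": "ControlNet is more important",
        }
        for unit in units
    ]
    return {
        "prompt": prompt,
        "width": width,
        "height": height,
        "cfg_scale": cfg_scale,  # the article uses values as low as 2
        "steps": steps,          # the article uses 12-18
        "alwayson_scripts": {"controlnet": {"args": controlnet_args}},
    }
```

The resulting dictionary would be sent as JSON to the `/sdapi/v1/txt2img` endpoint of an A1111 instance started with the API enabled; `units` is a list of ControlNet unit dictionaries, one per unit.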

Comparison of Instant ID and ReActor

Although ReActor can produce very good results with en face (frontal) transfers, its usability at other angles is limited, and some pixelation artifacts can occur. This is where Instant ID shines; however, the resemblance to the source is not always perfect.

Overall, ReActor (and similar solutions) is very fast and practical. Instant ID wins on quality and flexibility but is substantially slower.

Conclusion

Instant ID is usable for SD1.5 and SDXL for transferring synthetic faces, and the results are great. For now, only the SDXL version is available for the workflow described in this article. The technique is effective for producing synthetic faces for diffusion model training. Reproducing the faces of real people is often ethically dubious; however, the technique can be used for forensic facial reconstruction or for hiding identities in training images. This article and review will be updated as more effective versions of Instant ID emerge.

References

- Instant ID project ControlNet https://github.com/Mikubill/sd-webui-controlnet/discussions/2589

- Instant ID https://github.com/InstantID/InstantID

- InstantID HuggingFace https://huggingface.co/InstantX/InstantID

- Research paper: InstantID: Zero-shot Identity-Preserving Generation in Seconds, authors Wang, Qixun and Bai, Xu and Wang, Haofan and Qin, Zekui and Chen, https://arxiv.org/abs/2401.07519