Latent Consistency Models (LCM) and LCM LoRAs: Faster Inference for Experiments and Animations

Latent Consistency Models are models "distilled" from base checkpoints into versions able to generate image with fewer inference steps (sometimes referred to as 4-step generations). The process requires a substantial amount of resources. In this article, we will take a look at another novel approach to LCM, LCM LoRA (and its use in A1111).

UPDATE: You may download and test LCM a new merged model: Photomatix v3-LCM

What is Latent Consistency Model (LCM)?

How does it work? It enables fast inference with minimal steps on any pre-trained LDMs (Latent Diffusion Models), solving augmented probability flow by predicting the solution of reverse diffusion in latent space (see the paper in References). Simply, it is using a specially trained (distilled) latent diffusion model to create swift inferences.

You may also test the distilled LCM model in LCM sd-webui-lcm extension (install from Extensions tab, requires ~4GB download). However, we will now take a different route.

LCM LoRAs: Test LCM With Any Model

The good news is, we can use LoRAs for the job. LCM LoRA uses training of a small number of adapters (layers), which can then be applied on any model. We can use the pretrained LoRA (download it below) or train one yourself (see References).

Installation of LCM LoRA is the same as with any LoRA. In A1111, download .safetensors file it into the "\stable-diffusion-webui\models\Lora" folder (rename the file f.i. LCM.safetensors for 1.5 version and LCMSDXL.safetensors for SDXL). Use it in prompt and you are ready to go.

- SD https://huggingface.co/latent-consistency/lcm-lora-sdv1-5/tree/main

- SDXL(exper.) https://huggingface.co/latent-consistency/lcm-lora-sdxl/tree/main

IMPORTANT NOTE: You may experiment with SDXL LoRA, but the results are very low quality in A1111 (you may rather test it with ComfyUI workflows). In A1111, SDXL LCM Lora may not show in the SDXL networks list, if this is the case use direct name of the file in the prompt, f.i.

portrait <lora:pytorch_lora_weightsSDXL:1>

NOTE: Use CFG scale 1-2.5

Recommended Samplers

DPM++ SDE Karras,Euler a,Euler,LMS,DPM2,DPM++ 2S a,DPM++ SDE,DPM++ 3M SDE,DPM adaptive,LMS Karras,DPM2 Karras,DPM++ 2S a Karras,DDIM,UniPC

If you have AnimateDiff installed, you may also experiment with LCM sampler to remove some artifacts in animations (needs more steps). LCM sampler is a good option for hires fix in LCM checkpoint models.

How to Use It With ControlNet

You may adjust the ratio "Steps/CFG Scale" higher than in txt2img alone. As you go higher with CFG, add the steps accordingly (f.i. CFG 7.5 creates a decent image with 20 steps). The example is using Softedge control type.

Pros and Cons

- good for fine-tuning prompts

- speed gain is considerable

- allows use of other LoRAs

- noise artifacts and banding can occur, requires adjustment of workflow

- sometimes inconsistent results

- limits creating variations via steps, less details

Using a low CFG Scale is a downside, but even with the usable values (1-2), the model seems to react quite well to prompt tokens. Some noise artifacts and speckles can be caused by intensity distribution in layers during inference, and they can be removed by a high-res fix pass (using a non-LCM checkpoint).

Can You Combine It With NVIDIA RT Models?

Yes. You can use all advantages from NVIDIA RT technology. It is fast.

Use Cases and Perspectives in VFX

Fast inference is intended for quick, near-real-time rendering of SD models. Latent Consistency Models can be used for rapid experimental rendering even in the current version. Below, you may see the AnimateDiff experiment (without ControlNet) with the LCM model (more in the next article on Temporal Consistency):

While the technique is still in its early stages, it is evident that there is great potential for applications in video and VFX. Even with an extra step with upscaling (or Hires fix) in video creations, you will still benefit from a fast preview generations.

Note: You can not use NVIDIA RT SD Unet with ControlNet and AnimateDiff techniques yet.

Conclusion

LCM currently allows to significantly reduce number of steps for image generation. The speed gain is notable and you can get usable results. SDXL version does not perform well in A1111, however considering the novelty of LCM models we can expect to get better versions soon.

References

- LCM models on https://huggingface.co/collections/latent-consistency/latent-consistency-models-loras-654cdd24e111e16f0865fba6

- LCM LoRA Technical Report

- Training LCM LoRA blog article

- LCM Models paper

- Consistency Models paper

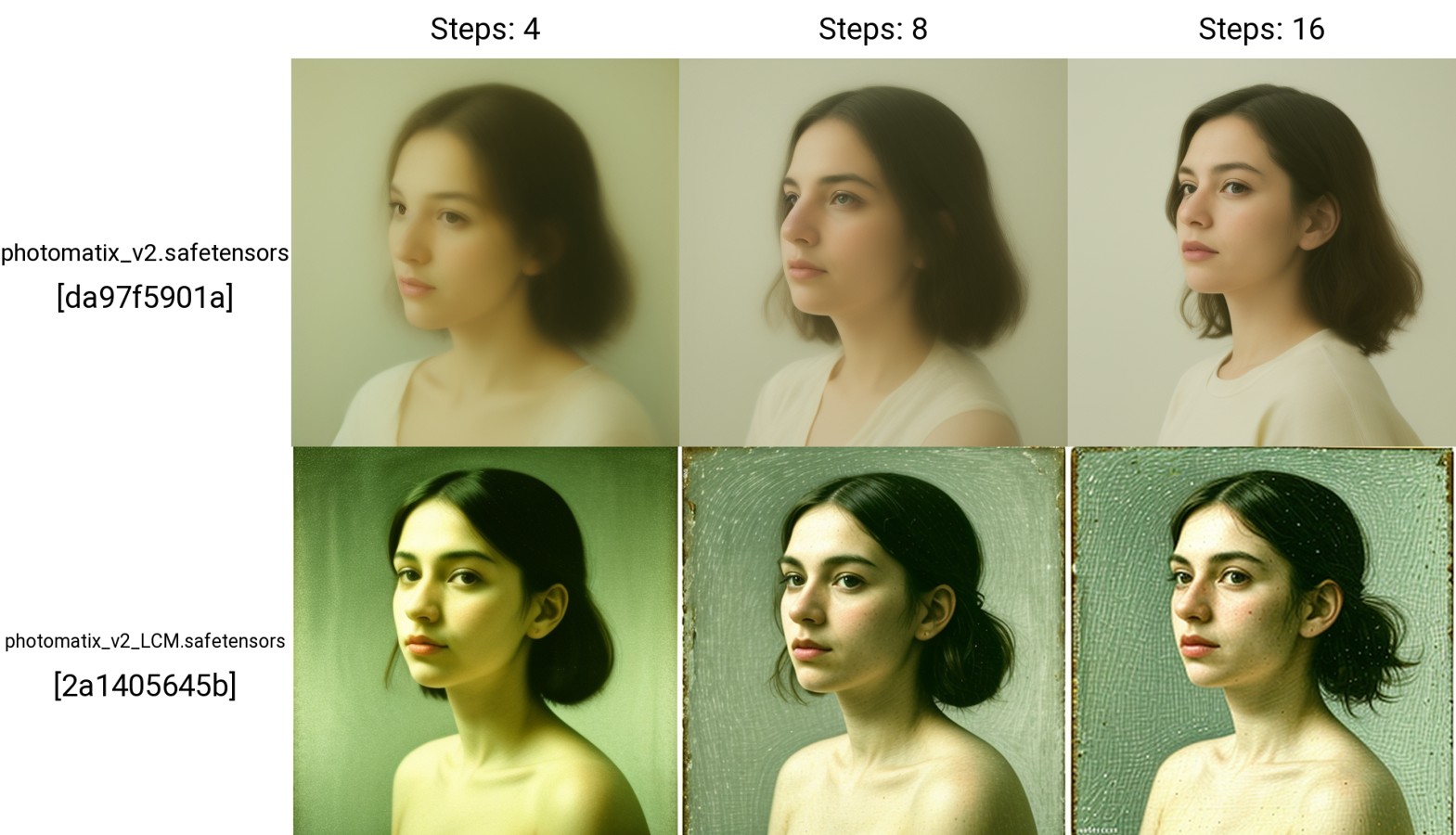

- SD model used for testing and illustrations: Photomatix v2/v2-LCM