Stable Diffusion 3.5 (Large): What You Need to Know

Good news, everyone! A new Stable Diffusion model has arrived! And it is good—with some caveats.

Stable Diffusion 3.5 Large is an 8-billion-parameter Multimodal Diffusion Transformer (MMDiT) text-to-image generative model by Stability AI. It features many improvements and a solid architecture. As a large-sized model (with a medium-sized version to be released soon), it is a strong contender in the field of open models—at least parameter-wise. But how does it really perform?

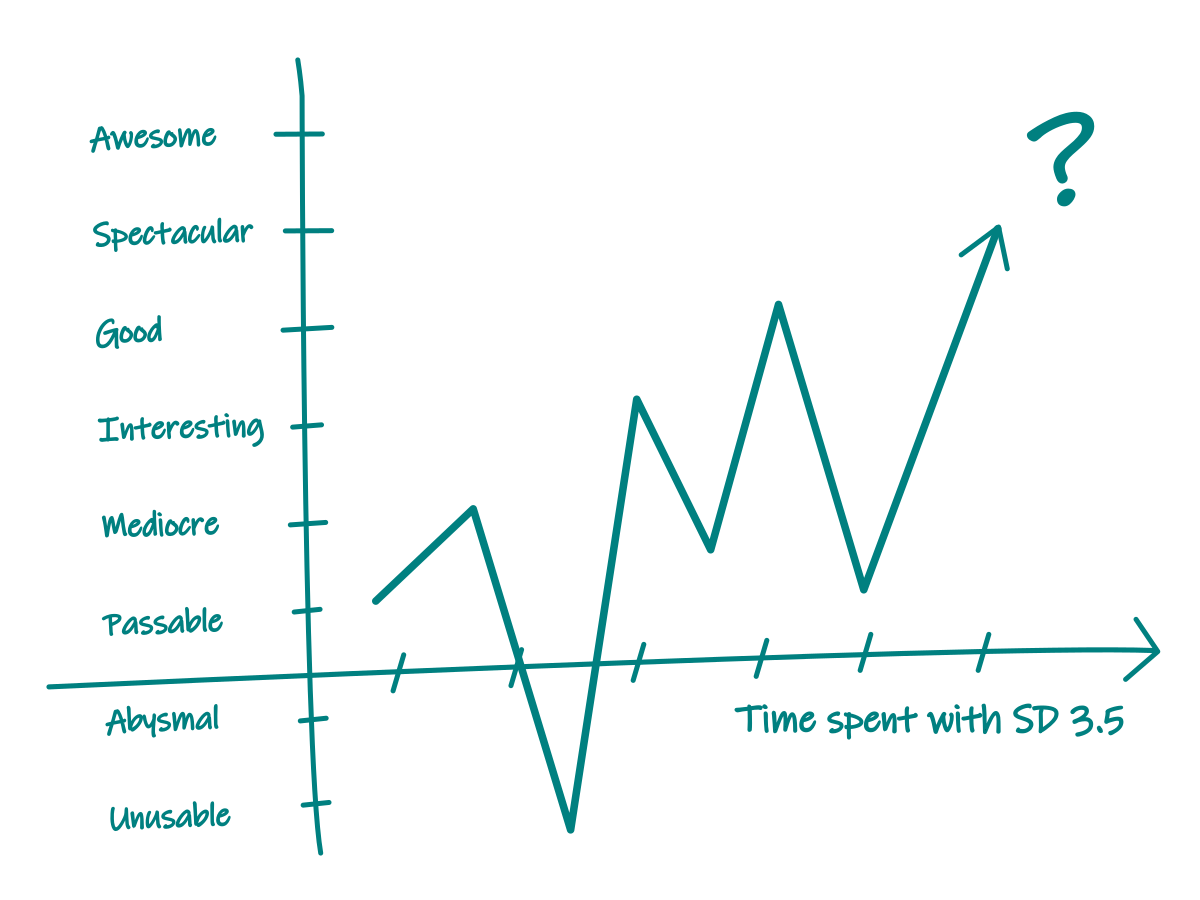

Here is the scientifically approved curve of hope and disappointment that you may experience during the testing of SD 3.5 (Large):

In this highly sophisticated graph of my research, you may notice some bumps. Why is this happening? The SD3 model has certain peculiarities related to its design and training, which may be incompatible with the tricks and workflows you happily use with other models. Here are some downsides you will inevitably run into with the Large model (it is better to list these right at the beginning):

- Resolution limit: There is an upper limit and a lower limit beyond which the model starts to break, resulting in artifacts, generating random styles, or partially ignoring most of the prompt. The range seems to be around 960-1152 for square resolutions. Stability AI recommends a total resolution of 1 megapixel and dimensions divisible by 64. However, I have also created nice images at irregular resolutions like 800x1152. See workflows for examples.

- Forget about effective upscaling workflows with the SD3 Large model. Everything over the effective resolution range becomes noisy and heavily pixelated. However, this issue may become a feature with a (possible) future tiling IP adapter.

- Eyes. Hands. Often in portraits, the model produces a very nice facial expression and composition, but hands and object consistency are beyond repair. Given the limited range for high-resolution fixes or img2img approaches, this may create the initial false impression that the model is generally poor.

- Speed (this one is quite relative; more on that later in comparison with Flux).

- Finnicky prompting and wandering styles. But this could be said, more or less, about any generative model.

Why Stable Diffusion 3.5 Is Actually Great

With that said, SD 3.5 is a fun model to play with. It can output high-quality images and styles straight out of the box. Good points are:

- Many (or most) of the mentioned issues can probably be solved by custom training and fine-tuning.

- Simple workflow (well, except for the contrived negative prompt).

- It may not seem at the first glance, but it actually performs pretty well.

- Great for stylized images (and huge number of lightting styles for photos) with some prompt engineering magic

- The outputs are very diverse and produce a wide range of realistic characters, without the strong face type bias seen in previous SD models or Flux.

- Greater variation from the same prompt with different seeds (this can take some time to get used to)

- The model is ready to be trained

- The model can often handle quite convoluted (read: sloppy) prompts. I would not necessarily call it a generally good adherence, but still.

- It is a new model, but it retains familiar Stable Diffusion traits (similar to Flux, SD styles quite work)

- The low-step Turbo version is actually very usable.

- It is a great and very much needed competition on the field of open generative models.

There is another (SD 3.5 Medium) version released, with max resolution up to 2 megapixels.

Download and Installation of SD 3.5 Large and Turbo

You can download just this version, containing all needed text encoders (I am using it in the most examples):

- sd3.5_large_fp8_scaled.safetensors https://huggingface.co/Comfy-Org/stable-diffusion-3.5-fp8/tree/main

- GGUF (smaller, quantized) versions https://huggingface.co/city96/stable-diffusion-3.5-large-gguf/tree/main , https://huggingface.co/city96/stable-diffusion-3.5-large-turbo-gguf/tree/main

Place sd3.5_large_fp8_scaled.safetensors it into your Stable Diffusion model folder in Comfy UI. Forge is not yet supported (at the time of writing this article).

There are text encoders in the text_encoders folder. You may download them to models/clip. Normally the model uses clip_g, clip_l, and (you may experiment with other text encoders instead of clip_l models in a workflow).

Comfy Workflows

There are two types of example workflows: one (sd35-scaled-sai-negprompt.json) uses a limited negative prompt (SD 3.5 has issues with CFG in negative prompts, so it must be watered down during generation), and the other (sd35-scaled-test.json) uses an empty negative prompt. You will find these SD 3.5 Large workflows on GitHub (you may use the original examples; these are just modified with some changes, styling, and my notes added).

LoRAs, ControlNet, IP Adapter

- LoRA training config example https://github.com/ostris/ai-toolkit/blob/main/config/examples/train_lora_sd35_large_24gb.yaml

- ControlNet, IP Adapter especially for SD 3.5 is not yet available (this section will be updated).

List of LoRAs (will be updated).

Comparisons With Flux

These examples were created with sd3.5_large_fp8_scaled and flux1dev-Q4_K_S model to fit into VRAM, so take them with a grain of salt. However, the comparison illustrates well what you will generally encounter. All generations are 1-pass, not cherry-picked (but the examples were chosen to show the differences in the models). You may use the example workflow for further testing of other models.

(Above) SD 3.5 adheres more to the description of the scene when the prompt is more difficult. In the second example (Below), the prompt is simplified, and the scene fits more to the description in both cases (notice some issues with crowd generation in SD 3.5):

3D Scene (image above): You may see that at 28 steps, the hands are not yet finished in SD 3.5. However, the prompt adherence with a brief prompt is better. Again, ignore the texts errors in the example, it can often fail in both models.

SPEED: Flux produces a very sharp and 'finished' image in fewer steps, but since it is more resource-hungry, SD 3.5 may still be faster with double the steps.

Turbo vs. Schnell

SD 3.5 Turbo is a speed-optimized, low-step model that produces somewhat simplified outputs compared to the Large version (similar to the situation with Flux-dev vs. Flux-schnell). It can generate nice images from as few as 3-4 steps (you may use Flux Schnell strategies to get the most out of it).

With the usual default setup, Turbo tends to generate sharper, more stylized images with vivid colors. This may be what you want, but if you are going for realism, you will need to use some tricks (see above).

Tips

- Do not get discouraged too early.

- Do not take influencers too seriously.

- Enjoy these pioneering times while they last.

Conclusion

The model will reveal its strengths and weaknesses in the coming months, depending on whether it is well-received by the community and whether fine-tuning of models and LoRAs will be effectively possible (and affordable) for a wide audience of users.

It will take some time to really explore the boundaries of what is possible (and fix some issues) with the model. Good job, Stability AI—you're back on track. In my opinion, this is possibly the best Stable Diffusion base model release yet.

Fun fact: Basically all the claims from the model introduction have been confirmed during my tests and experiments in various scenarios. This is a pleasant surprise, for a change.